AI Promises Climate-Friendly Materials

To tackle climate change, scientists and advocates have called for a bevy of actions that include reducing fossil fuel use, electrifying transportation, reforming agriculture, and mopping up excess carbon dioxide from the atmosphere. But many of these challenges will be insurmountable without behind-the-scenes breakthroughs in materials science. Today’s materials lack key properties needed for scalable climate-friendly technologies.

Batteries, for example, require improved materials that can yield higher energy densities and longer discharge times. Without such improvements, commercial batteries won’t be able to power mass-market electric vehicles and support a renewable-powered grid. Likewise, methods for capturing carbon dioxide from the atmosphere will remain prohibitively expensive until better catalysts are developed.

The traditional materials-development pipeline will struggle to deliver the needed innovations. Designing new materials with specific properties can be excruciatingly hard, and bringing such materials to market typically takes 10 to 20 years. That rate is far too slow for confronting the climate crisis, which the world must begin tackling in earnest by the end of the decade, according to the Intergovernmental Panel on Climate Change (IPCC), a global body of climate experts.

A new generation of experiments combining big data, artificial intelligence, and, increasingly, robotics could change the game. Some researchers believe these tools can discover materials that humans would never think of and dramatically cut the time needed to move a molecule or material from a dream to a product. The idea “is still in its early stages,” says George Crabtree, a materials scientist at Argonne National Laboratory in Illinois, who directs the Joint Center for Energy Storage Research (JCESR), but already it “has everyone talking.”

From Luck and Pluck to Inverse Design

Traditionally, scientists have discovered materials through a combination of intuition, pluck, and just plain luck. The approach led to many dead ends, but it also produced key innovations, including the components of the lithium-ion battery, which Crabtree calls “the best battery we’ve ever had.”

Starting in the late 20th century, computers enabled scientists to simulate structures and properties of molecules and materials and synthesize only the most promising ones, saving time and money. High-throughput screening further enabled the testing of dozens or hundreds of compounds in quick succession.

But these methods remain limited by scientists' imagination and understanding. The landscape of possible arrangements of elements in a molecule or crystal lattice is unimaginably vast, and scientists have studied only slivers of that landscape. "We've barely scratched the surface," says Venkat Viswanathan, a materials scientist at Carnegie Mellon University in Pennsylvania.

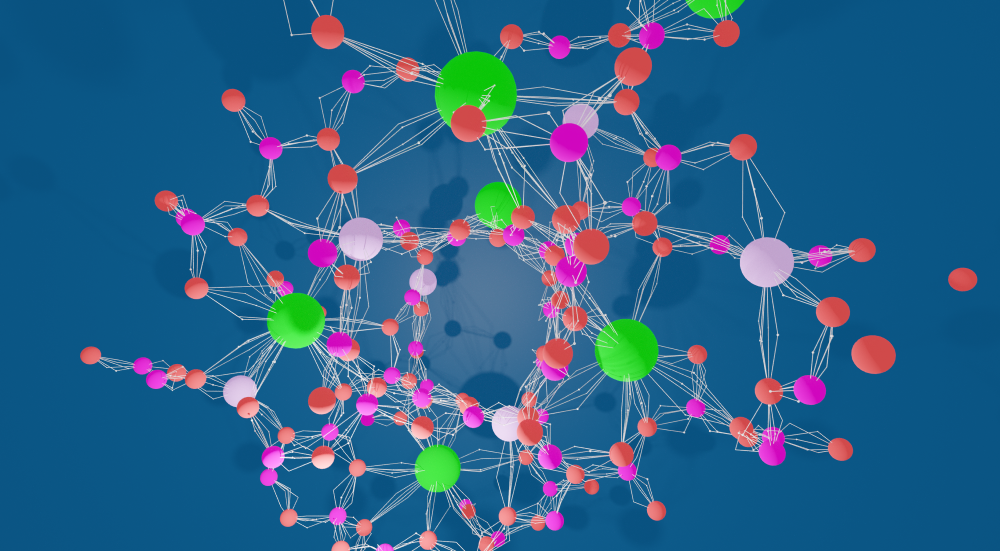

Enter artificial intelligence. AI methods such as machine learning can plumb enormous datasets for subtle correlations, or trends, that might elude humans. A familiar application is facial recognition, in which algorithms analyze millions of photos to learn to spot the distinguishing facial features that identify a person.

In a materials context, one can train a machine-learning algorithm to seek arrangements of atoms that yield a desired material property or function. Obtaining these structure-function correlations could allow "inverse design," a long-held goal in materials science in which researchers start from a desired function and work backward to the material that best provides it.

There is a problem, however: Materials science lacks the massive training datasets that are available for facial recognition and other common AI applications. “Materials science is by design very sparse in data,” says Kristin Persson, a physicist at Lawrence Berkeley National Laboratory in California who runs the Materials Project, the world’s largest public materials database. Synthesizing and fully characterizing a material is painstaking and time consuming, so databases of materials often contain at most a few hundred entries for a particular material property.

But with larger troves of materials data rapidly coming online and new methods that can generate large volumes of data quickly, many researchers feel materials science is ripe for an AI revolution. Climate change could be an area where that revolution delivers big results.

Grid Solutions

Perhaps no technology is more critical to a low-carbon future than batteries. To meet electricity demands, public utilities are starting to add massive batteries to the electric grid to store solar and wind energy during peak production times and release the energy at night or during low-wind periods. But today’s lithium-ion batteries are poorly suited to this task: They can discharge optimally for only four to six hours, whereas in winter at high latitudes, utilities might need up to 16 hours of nighttime battery backup.

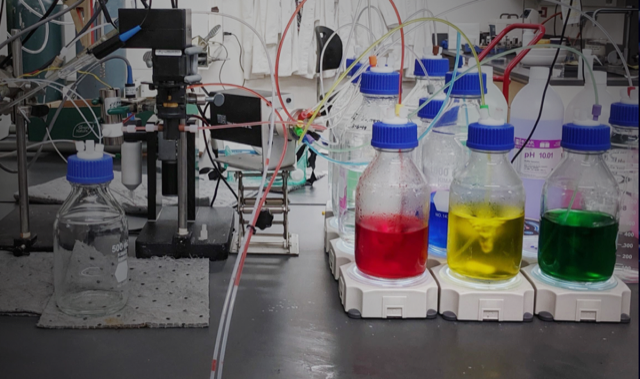

A new technology called flow batteries—in which redox reactions between chemicals stored in large tanks release electrons that flow through external circuits—is being investigated for grid-scale applications. Current state-of-the-art flow batteries are based on the element vanadium, which is prohibitively expensive for wide-scale deployment.

Some researchers hope cheaper organic compounds can replace vanadium in flow batteries. But most organics are chemically unstable, forcing researchers to sift through some 20 million known organic compounds, plus far more yet to be studied, to find rare, stable candidates. The massive design space makes the problem well suited to AI.

A few years ago, JCESR-funded researchers programmed a machine-learning algorithm to discern structure-function correlations from a large organic-molecule dataset and then predict a novel, ultrastable, flow-battery material. A University of Michigan researcher synthesized the material and found that it lasted 1000 times longer than previous organic flow-battery candidates. “That got our attention,” says JCESR director Crabtree, though he notes that the compound lacks the desired solubility—illustrating how battery materials must satisfy multiple demands simultaneously. The team is now applying AI to address the solubility challenge as well.
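When a compound must satisfy several demands at once, such as stability and solubility, one standard screening approach is to keep only Pareto-optimal candidates: those that no other candidate matches or beats on every criterion. The sketch below uses made-up candidate names and scores purely for illustration; it is not presented as the JCESR team's method.

```python
from typing import NamedTuple

class Candidate(NamedTuple):
    name: str
    stability: float   # higher is better (hypothetical units)
    solubility: float  # higher is better (hypothetical units)

def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on both criteria and strictly better on one."""
    at_least_as_good = a.stability >= b.stability and a.solubility >= b.solubility
    strictly_better = a.stability > b.stability or a.solubility > b.solubility
    return at_least_as_good and strictly_better

def pareto_front(cands: list[Candidate]) -> list[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]

cands = [
    Candidate("A", stability=0.95, solubility=0.10),  # very stable, poorly soluble
    Candidate("B", stability=0.60, solubility=0.70),
    Candidate("C", stability=0.50, solubility=0.60),  # worse than B on both
    Candidate("D", stability=0.30, solubility=0.90),
]
print([c.name for c in pareto_front(cands)])  # ['A', 'B', 'D']
```

A very stable but poorly soluble compound like the hypothetical "A" survives the filter, but so do trade-off candidates; choosing among them still requires deciding how much of one property to give up for the other.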

Bring in the Robots

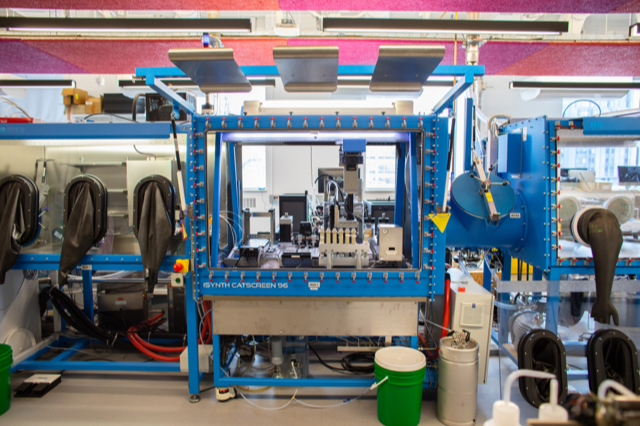

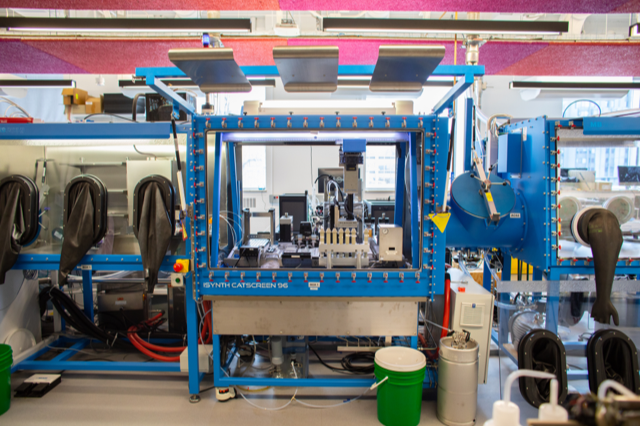

Another challenge AI enthusiasts face is that even when a machine-learning algorithm “discovers” a promising compound, it needs to be synthesized and tested, which can take years. A second innovation is starting to make headway: the self-driving or autonomous laboratory. In such labs, robots with access to molecular or chemical building blocks quickly synthesize and test compounds that AI suggests. The results feed back into the AI’s training dataset to generate even-better-informed suggestions.
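The propose-test-learn cycle described above can be sketched as a simple loop. Here `run_experiment` is a hypothetical stand-in for the robot's synthesis and measurement, and the "AI" is deliberately crude: it scores each untested recipe by the result of its nearest tested neighbor plus a bonus for being far from anything tested. Real self-driving labs often use more sophisticated strategies, such as Bayesian optimization; the exploration weight of 0.5 is an arbitrary assumption.

```python
import random

def run_experiment(x: float) -> float:
    """Hypothetical stand-in for robotic synthesis and testing.
    The 'true' property peaks at x = 0.73; the loop does not know this."""
    return -(x - 0.73) ** 2

def propose(data: dict[float, float], pool: list[float]) -> float:
    """Score each untested recipe by the result of its nearest tested
    neighbor (exploitation) plus its distance to that neighbor (exploration)."""
    def score(x: float) -> float:
        nearest = min(data, key=lambda t: abs(t - x))
        return data[nearest] + 0.5 * abs(nearest - x)
    return max((x for x in pool if x not in data), key=score)

random.seed(0)
pool = [i / 100 for i in range(101)]  # candidate recipes
data = {x: run_experiment(x) for x in random.sample(pool, 3)}  # seed experiments

for _ in range(15):  # closed loop: propose, test, learn
    x = propose(data, pool)
    data[x] = run_experiment(x)  # each result feeds back into the dataset

best = max(data, key=data.get)
print(f"best recipe after {len(data)} experiments: {best:.2f}")
```

Every new measurement changes the scores of the remaining candidates, which is what makes the suggestions "better informed" with each round.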

Some materials scientists have decided this is the future of the field. “Two and a half years ago I jumped all in on this way of doing research,” Viswanathan says. Viswanathan’s passion is batteries for transportation. Recent battery improvements have increased electric vehicles’ ranges and enabled a nascent electric airplane industry to take off. But many researchers believe that lithium-ion-battery electrodes—whose energy density has roughly tripled over three decades—are nearing the maximum energy density possible with current materials. Without new battery materials, electric vehicles and aircraft will likely fall short of their full potential to decarbonize transportation.

To break this logjam, Viswanathan built a "self-driving laboratory" called Otto. He then tasked it with optimizing stability for a recently discovered class of electrolytes in which salt is dissolved in water at very high concentrations, a system for which no good theory exists to guide experimentation. In just 40 hours, the AI-robot combination had iteratively explored a space of some 1.2 million combinations of four salts and improved on the best known "water-in-salt" electrolyte, the team reported late last year. The robot's result is a combination that human scientists probably never would have thought of, Viswanathan says. "We still don't know clearly why it works, which is the kind of answer we were looking for."

The automated experiment’s speed and its ability to find a counterintuitive solution are both “impressive,” says Crabtree, who wrote a commentary on the study. AI especially outperforms humans at exploring multidimensional spaces like the one Viswanathan created, he adds. But a commercially viable electrolyte would need further improvement. Viswanathan has since built a second-generation robotic lab, which he hopes will produce market-ready materials.

Accelerating Carbon Drawdown

While advances in batteries and other energy technologies could reduce future carbon dioxide emissions, there is also the problem of past emissions, which have already warmed the planet by more than 1 °C. The IPCC has estimated that 100 billion tons of carbon dioxide need to be removed by 2100 to stabilize the climate. A 2011 American Physical Society report pegged the cost of direct air capture at $600 per ton, or $60 trillion for the IPCC's goal, an impossible figure. Despite a decade of research and the launch of several carbon capture companies, that dollar figure has not come down appreciably.
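The $60 trillion total follows directly from multiplying the two quoted numbers, the removal target and the per-ton cost:

```latex
\[
\left(100 \times 10^{9}\ \text{t CO}_2\right) \times \frac{\$600}{\text{t CO}_2}
  = \$6 \times 10^{13}
  = \$60\ \text{trillion}
\]
```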

Machine learning is starting to impact research into this vexing challenge. A key need is finding good chemical sorbents that can efficiently separate dilute carbon dioxide from other, more abundant atmospheric gases, such as oxygen and nitrogen.

A promising class of compounds is metal-organic frameworks (MOFs)—porous materials that act as tiny sponges and can be tuned to adsorb specific molecules. Recently, researchers applied machine-learning techniques to the task of characterizing MOFs. “We got really good predictions from the machine learning,” says Krista Walton, a materials scientist at the Georgia Institute of Technology who coauthored the study. The method could aid future experimental efforts to optimize such materials, she says.

A related problem is what to do with the carbon dioxide after isolating it. Carnegie Mellon University chemical engineer Zachary Ulissi used machine learning to search for new catalysts that can convert carbon dioxide to ethylene, an important precursor for plastics.

Ulissi first generated a training dataset by applying density-functional theory (DFT), another powerful computational technique, to copper-containing catalysts in the Materials Project database. He then iteratively ran machine-learning codes to predict new catalysts. Ulissi eventually persuaded University of Toronto materials scientist Ted Sargent to synthesize and test the most promising compounds from the machine-learning predictions. The researchers reported in May 2020 that their best copper-aluminum alloy converted carbon dioxide to ethylene with greater than 80% efficiency, versus 66% for the pure copper catalysts used today.

Ulissi says catalysis R&D—which requires massive numbers of computations related to crystal structures and chemical reaction rates—is an ideal area for data-hungry machine-learning methods. “These tools really shine when there are millions or billions of possibilities,” he says.

Taming the Hype

Despite recent successes, some experts worry that AI could become overhyped. Data paucity, for one, remains a severe limitation. “In the end, machine learning is really just interpolation in multidimensional space,” says Persson, who warns students that they may need to spend a year generating a dataset with DFT or another method before crunching it with AI. “If you don’t have points in that multidimensional space, you’re not gaining anything.”
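Persson's point can be illustrated with the simplest possible learner, a one-nearest-neighbor regressor, trained on a made-up one-dimensional "property." Inside the range covered by the training data its guesses are close; far outside that range it can only repeat the nearest value it has already seen. The function and data here are hypothetical and chosen only to make the effect visible.

```python
def true_property(x: float) -> float:
    """Made-up ground truth, for illustration only."""
    return x ** 2

# Training data only covers 0 <= x <= 1.
train = {i / 10: true_property(i / 10) for i in range(11)}

def predict_1nn(x: float) -> float:
    """Return the training value at the nearest training point."""
    nearest = min(train, key=lambda t: abs(t - x))
    return train[nearest]

for x in (0.52, 3.0):  # one query inside the data, one far outside it
    print(f"x={x}: predicted {predict_1nn(x):.3f}, true {true_property(x):.3f}")
```

At x = 0.52 the model guesses 0.250 against a true 0.270; at x = 3.0 it guesses 1.000 against a true 9.000, because no training point lies anywhere near the query.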

Deep learning, an increasingly popular technique in which algorithms learn relevant features directly from raw data rather than relying on features chosen by humans, remains mostly out of reach for materials. Deep-learning algorithms typically require millions of data points; the Materials Project, one of the largest materials datasets, currently contains just over 144,000 inorganic compounds. So, for the foreseeable future, the materials design process will still require humans. And despite announcements that companies such as Boeing and BP are using AI in materials design, it's not yet clear that any AI-designed materials have been commercialized, though companies won't necessarily divulge when they have, Persson notes.

Accelerating Discovery

The next few years may show just how far AI can take materials science. Alán Aspuru-Guzik, a computational chemist at the University of Toronto, believes AI- and robotics-fueled self-driving laboratories could compress the discovery-to-commercialization timeline for materials by a factor of 10, to just one or two years.

Aspuru-Guzik and colleagues have recently used self-driving labs to study and optimize optical and electronic properties of materials for photovoltaics, organic LEDs, and other applications. Other research groups have used machine learning and robotics to investigate nearly 2000 perovskites, a class of materials thought to hold promise for solar cells. “It’s not just BS,” says Aspuru-Guzik. “The examples are there.”

Both he and Viswanathan admit, however, that self-driving labs haven't yet found their killer app. Aspuru-Guzik is now launching what he calls the Acceleration Consortium, which will, among other things, enable researchers from other universities and from companies to run experiments in autonomous labs housed at the University of Toronto. With the money spent and the labs built, it's time to prove the idea can deliver, he says. "The clock is ticking."

–Gabriel Popkin

Gabriel Popkin is a freelance science writer in Washington, DC.