Biological Attacks Have Lessons for Image Recognition

The human immune system must distinguish the body’s own cells from invaders. Likewise, standard facial recognition software must distinguish the target face from all the others. A new mathematical model demonstrates this analogy, showing that a well-known strategy for outsmarting the immune system is akin to a standard way to fool a pattern recognition system. The model’s creators say that the fields of machine learning and immunology could each benefit from methods that work in the other field.

Every time an immune cell encounters a new biomolecule (“ligand”), such as the protein coating of a virus, it must categorize the molecule and determine the appropriate response. But the system isn’t perfect; HIV, for example, can avoid setting off an immune response. “In immunology, you have unexpected ways of fooling immune cells,” says Paul François, of McGill University in Canada. He realized that there is a similarity between the way certain pathogens outsmart the immune system and the way a sophisticated hacker can fool image recognition software.

One way an immune cell distinguishes among molecules is by the length of time that they remain bound to a receptor on the cell’s surface. “Stickier” molecules are more likely to be foreign. One strategy for pathogens involves overwhelming immune cells with borderline molecules called antagonists that bind to receptors and have an intermediate stickiness. Surprisingly, a flood of such antagonists can reduce the likelihood that a cell will activate an immune response even against obviously foreign molecules.

Artificial neural networks (computer programs that in some ways mimic networks of brain cells) can be used for image recognition after they’ve been trained with a wide range of image data. But a carefully constructed and nearly imperceptible modification of an image can cause a picture that human eyes see as a panda to be misclassified as a gibbon, for example.
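
To make the idea concrete, here is a minimal sketch of such an attack on a toy linear classifier, in the spirit of the gradient-sign approach from the machine-learning literature. It is not the procedure used in the paper; the weights, pixel values, and the step size `eps` are all invented for illustration. For a linear score, the gradient with respect to each pixel is just its weight, so nudging every pixel slightly against the sign of its weight lowers the score as much as possible for a given per-pixel change.

```python
# Toy linear classifier: score = sum(w_i * x_i); a positive score means "panda".
# All numbers here are made up for illustration.

def score(weights, pixels):
    return sum(w * x for w, x in zip(weights, pixels))

def sign(v):
    return (v > 0) - (v < 0)

def perturb(weights, pixels, eps):
    """Shift each pixel by at most eps in the direction that lowers the score."""
    return [x - eps * sign(w) for w, x in zip(weights, pixels)]

# 100 "pixels" with small weights of alternating sign.
weights = [0.05 if i % 2 == 0 else -0.05 for i in range(100)]
pixels = [0.6 if i % 2 == 0 else 0.4 for i in range(100)]

clean_score = score(weights, pixels)          # positive: classified as "panda"
adv_pixels = perturb(weights, pixels, eps=0.15)
adv_score = score(weights, adv_pixels)        # negative: classification flips

print("clean score:", clean_score)
print("perturbed score:", adv_score)
```

No single pixel changes by more than `eps`, yet the many small shifts add up across the image and flip the sign of the score.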

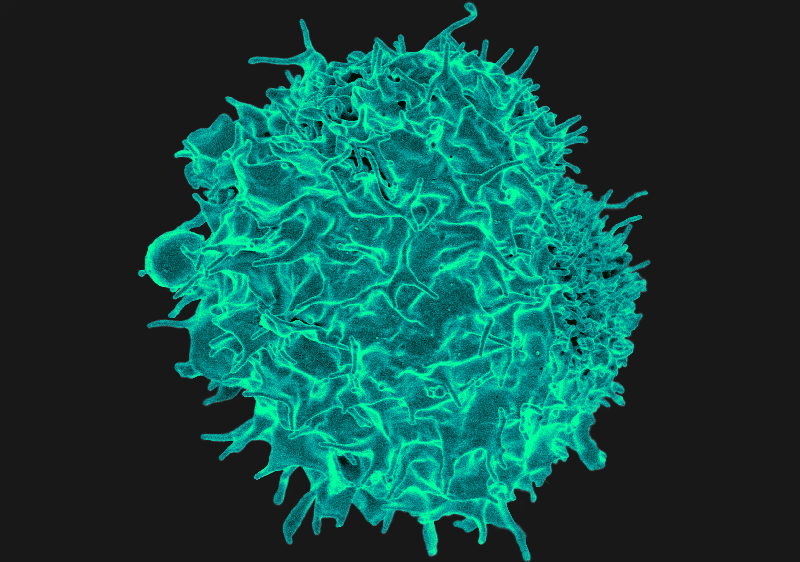

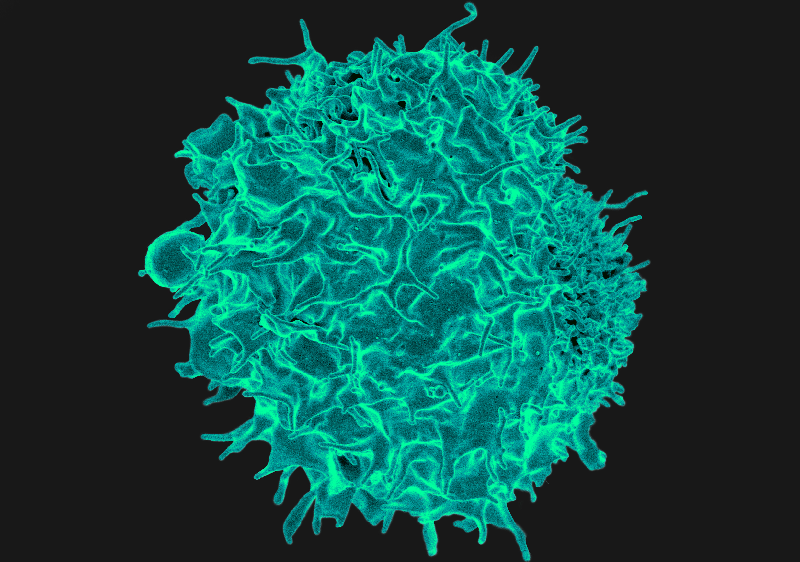

In both of these cases, an attacker tries to cause a sorting system to misclassify an object. To formally demonstrate the analogy, François and his colleagues developed a mathematical model of decision making by a type of immune cell called a T cell. In the model, which is a variation on previous models, the cell’s many receptors are exposed to numerous ligands having a range of stickiness values. Next, the model assumes that a series of biochemical reactions results from the binding and unbinding events at the receptors, which leads to a score—a number that determines whether the cell will activate an immune response or not. (Biochemically, the score is the concentration of a certain biomolecule.) The biochemical reactions include both “gas pedals,” which raise the score and make an immune response more likely, and “brakes,” which reduce the score.
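
The article does not give the model’s equations, so the following is only a toy sketch under assumed forms. It takes the score to be a ratio of two weighted sums over the bound ligands, with the “gas pedal” term weighting stickiness more steeply than the “brake” term. The exponents `N` and `M`, the `THRESHOLD`, and the ligand numbers are hypothetical choices.

```python
# Toy T-cell score. Each ligand population is (count, binding_time).
# N, M, and THRESHOLD are illustrative assumptions, not values from the paper.
N = 4             # exponent on the "gas pedal" term
M = 2             # exponent on the "brake" term
THRESHOLD = 64.0  # the cell responds only if the score exceeds this

def tcell_score(ligands):
    gas = sum(count * tau ** N for count, tau in ligands)
    brake = sum(count * tau ** M for count, tau in ligands)
    return gas / brake

def activates(ligands):
    return tcell_score(ligands) > THRESHOLD

# A population of very sticky (foreign) ligands on its own...
foreign = [(1000, 10.0)]
# ...and the same population swamped by many ligands of intermediate stickiness.
with_antagonists = foreign + [(10000, 3.0)]

print(tcell_score(foreign), activates(foreign))
print(tcell_score(with_antagonists), activates(with_antagonists))
```

With these numbers the foreign ligands alone score about 100 and trigger a response. Adding the antagonists pulls the score down to about 57, below the threshold, even though every foreign ligand is still present: the antagonists feed the brake relatively more than the gas pedal.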

The team compares this model with a neural-network-based pattern recognition system that can distinguish a handwritten 7 from a 3. The input to this system is an unknown image containing a set of pixel-brightness values analogous to the initial distribution of ligands in the biological case. The neural net generates a score from its input and uses it to determine whether the image is a 7 or a 3.

The researchers show that a standard mathematical procedure for generating a slightly perturbed image that will fool a pattern recognition system translates directly to the biological model. In the model for T cells, this perturbed image corresponds to the antagonist strategy, which reduces the likelihood of an immune response. The team also demonstrates a defense strategy for the neural network that is inspired by the biological case. An antagonist defense known from a similar biological model is to reduce the weight of weakly bound ligands when calculating the score, so that these ligands are less effective antagonists. This strategy translates to setting some of the weak background pixels to zero, and the team showed that it is effective at thwarting attempts to fool the neural net.
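
The pixel-zeroing defense can be illustrated with a toy example. This is a sketch under stated assumptions, not the team’s implementation: the classifier is a simple linear score in which positive means “7”, and the weights, the attack size `EPS`, and the brightness `CUTOFF` are hypothetical.

```python
# Toy image: 10 bright "stroke" pixels followed by 90 dark background pixels.
# Positive score means "7", negative means "3". All numbers are illustrative.
weights = [0.2] * 10 + [-0.1] * 90
clean = [1.0] * 10 + [0.0] * 90

EPS = 0.2     # largest change the attacker makes to any one pixel (assumed)
CUTOFF = 0.5  # pixels dimmer than this are treated as background (assumed)

def score(weights, pixels):
    return sum(w * x for w, x in zip(weights, pixels))

def sign(v):
    return (v > 0) - (v < 0)

def attack(weights, pixels, eps):
    """Shift every pixel by eps in the direction that lowers the score."""
    return [x - eps * sign(w) for w, x in zip(weights, pixels)]

def suppress_weak(pixels, cutoff):
    """Defense: set weak (dim) pixels to zero before scoring."""
    return [x if x >= cutoff else 0.0 for x in pixels]

attacked = attack(weights, clean, EPS)

print("clean:", score(weights, clean))
print("attacked, no defense:", score(weights, attacked))
print("attacked, with defense:", score(weights, suppress_weak(attacked, CUTOFF)))
```

In this toy case most of the attack’s effect comes from faintly lighting up the many background pixels. Zeroing them removes that contribution, and the slightly dimmed stroke pixels are still enough to keep the score positive.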

Biophysicist David Schwab of the City University of New York says that new approaches to thwarting attacks against pattern recognition systems are important because such attacks can have real consequences. For example, Tesla engineers realized that a marker placed in the road could make their self-driving car change lanes automatically. Similarly, a sticker strategically placed on a stop sign could fool a car’s vision system into perceiving the wrong symbol, which could have deadly consequences.

This research is published in Physical Review X.

–Ramin Skibba

Ramin Skibba is a freelance science writer in San Diego.