Extended Viewing with Glasses-Free 3D

Watching a superhero film in an immersive 3D format seems like a thoroughly modern experience. But in reality, 3D glasses have been around since the mid-1800s. The technology has constantly improved over its 150 years of existence, but it may finally be mature enough to lose the funky glasses.

Glasses-free 3D displays have been demonstrated within the past few years, but they typically require the viewer to stand at a specific distance from the screen. Now, Wen Qiao and her colleagues from Soochow University in China have developed a new prototype lens that can extend the viewing range, or “depth of field,” beyond what is currently available [1]. The flat lens consists of an array of nanostructures that projects multiple angles of an image towards the viewer. The researchers predict that, in years to come, this design could allow 3D video conferences for remote working. The technology could also make online shopping more like in-person shopping. “When consumers browse an online store, the 3D display system helps them make decisions by virtually reconstructing the merchandise with high fidelity,” Qiao says.

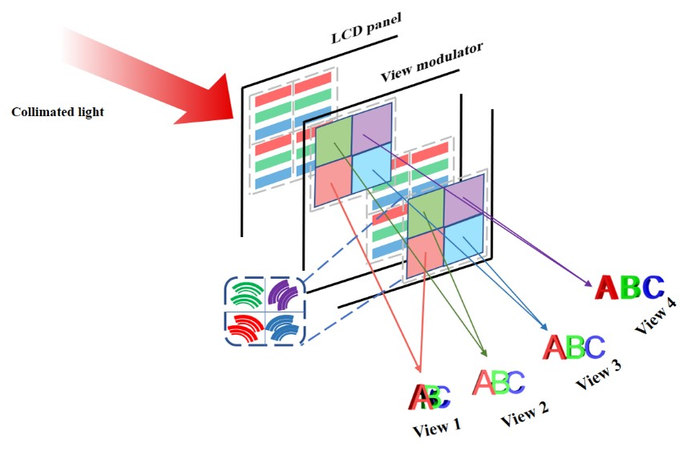

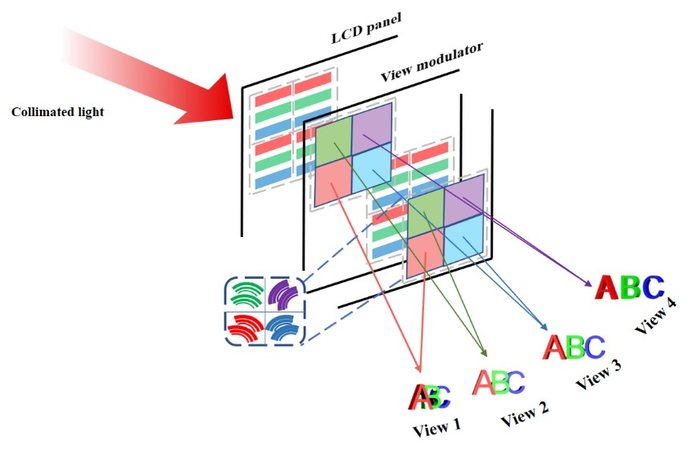

In a movie theater, a 3D film combines two images of a scene, each taken from a slightly different angle. The 3D glasses that you wear filter the two images for your eyes so that you see a single, multidimensional image. For example, the light for one image might be polarized horizontally while the other is polarized vertically, and each lens in the glasses would select just one polarization. However, unlike moviegoers who stare straight ahead at a screen, viewers of a 3D display are not always viewing the display from the same angle. As a result, the device needs more than two images: typically four or more perspectives of a scene are recorded and projected at different angles towards the viewer. (This multiview, or stereographic, approach is distinct from a holographic projection, which uses a laser-recording process and interference effects to create 3D images.)

One of the standard methods for projecting multiview images is to use an array of microlenses that focus each view at a specific distance from the device. Such arrays are limited by two major factors: focal length, which can limit the viewing distance at which the images remain 3D to around 30 cm, and crosstalk, in which light leaking between viewing angles distorts the images and causes visual fatigue. Qiao and colleagues have made progress toward solving these issues by developing a lens that is etched into a flat, 100-μm-thick membrane. “We carefully designed a new diffractive flat lens by patterning nanostructures onto a flat surface in a way that focuses light,” Qiao explains. The nanostructures are sawtooth-shaped grooves whose spacing controls how strongly the light is bent.
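To give a feel for how groove spacing can set the bending angle, here is a minimal sketch based on the textbook grating equation for light at normal incidence, sin θ = mλ/d. It is only an illustration: the article does not give the groove periods or wavelengths used in the prototype, so the function name and every number below are assumptions, not values from the team's design.

```python
import math


def diffraction_angle_deg(period_nm, wavelength_nm, order=1):
    """Angle (degrees) of a diffracted order for light at normal incidence.

    Uses the standard grating equation sin(theta) = order * wavelength / period.
    All inputs are illustrative; they are not taken from the prototype.
    """
    sine = order * wavelength_nm / period_nm
    if abs(sine) > 1:
        # No propagating beam exists for this order at this groove spacing.
        raise ValueError("order does not propagate for this period")
    return math.degrees(math.asin(sine))


if __name__ == "__main__":
    green_nm = 532  # assumed wavelength, chosen only for the example
    for period_nm in (2000, 1000, 600):  # assumed groove spacings
        angle = diffraction_angle_deg(period_nm, green_nm)
        print(f"period {period_nm} nm -> first-order angle {angle:.1f} degrees")
```

In this simplified picture, tighter groove spacing steers light through a larger angle, which is why varying the spacing across a flat surface can make it act like a lens.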

The team fabricated a four-inch prototype lens, which was mounted in front of a liquid-crystal display (LCD) panel. In trials, the researchers generated four different views of an object, such as a string of letters and a cube. The four views are displayed simultaneously by the LCD, with one out of four pixels devoted to each view. The team’s lens focuses the light rays from each view in a different direction. Viewed together, the views form a 3D image at viewing distances between 24 cm and 90 cm. The team measured the amount of crosstalk to be below 26%, compared with 40% or more in microlens arrays.
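The idea of devoting one out of four pixels to each view can be sketched as a simple interleaving step. The article does not say how the prototype arranges the four views on the panel, so the repeating 2×2 tile used here, along with the function name and the toy data, is a hypothetical choice made purely for illustration.

```python
def interleave_views(views):
    """Combine four equally sized views into one frame.

    Assumed layout (not from the article): a repeating 2x2 tile in which
    each position in the tile shows the pixel from a different view.
    """
    if len(views) != 4:
        raise ValueError("expected exactly four views")
    height = len(views[0])
    width = len(views[0][0])
    frame = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Tile position (row parity, column parity) selects the view.
            view_index = (y % 2) * 2 + (x % 2)
            frame[y][x] = views[view_index][y][x]
    return frame


if __name__ == "__main__":
    # Four toy 4x4 "views", each filled with a single letter.
    views = [[[label] * 4 for _ in range(4)] for label in "ABCD"]
    for row in interleave_views(views):
        print("".join(row))
```

Each view ends up with a quarter of the panel's pixels, which is the trade-off behind this kind of multiview display: more viewing directions cost resolution per view.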

Optics expert Pierre-Alexandre Blanche from the University of Arizona says that one benefit of Qiao and colleagues’ design is that their diffractive lens has multiple focal distances. “The demonstration shows that the 3D rendering can be better with nanostructures than with conventional optics such as microlens arrays,” Blanche says.

The team’s prototype currently has a viewing angle of only 9 degrees, but Qiao hopes to extend this in the future to a full 180 degrees. To advance this work, the team will need to improve fabrication techniques for their nanostructures and develop new algorithms for calculating the nanostructure patterns that focus the light of each pixel.

–Sarah Wells

Sarah Wells is an independent science journalist based outside of Washington, DC.

References

1. F. Zhou et al., “Vector light field display based on an intertwined flat lens with large depth of focus,” Optica 9, 288 (2022).