Reservoir Computing Speeds Up

Light-based (photonic) technologies offer many benefits when it comes to building a computer: they are efficient, have high bandwidths, and deliver fast processing speeds. But progress in photonics-based computing has almost always been outpaced by advances in semiconductor electronics, which make up the logical elements in today’s computers. Where photonics does seem to be making headway is with alternative computation schemes, which solve problems differently than conventional transistor-based (binary) computers. One example of an alternative scheme is reservoir computing, which uses interconnected devices to mimic the neuronal architecture of the brain. Laurent Larger, of the French National Center for Scientific Research (CNRS) and the University of Burgundy Franche-Comté, and co-workers [1] have taken a photonics-based reservoir computer design and refitted part of it with optoelectronic components to achieve a threefold increase in processing speed. Their computer, which is built with standard telecommunication devices, is capable of recognizing one million spoken words per second and lends itself to being integrated into a chip.

Like several other brain-inspired approaches, reservoir computing [2, 3] relies on an artificial neural network that relays an input signal to different neuron-like units to produce a useful output. In a reservoir computer, the network consists of an internal part, or reservoir, made of many interconnected units, and a readout layer that both communicates with the reservoir and delivers an output. The reservoir units respond nonlinearly (and in an analog fashion) to a signal, and the connections between them, which set how much one unit’s response affects another’s, can have different strengths. Importantly, the connected units form so-called recurrent loops: closed loops in which a signal travels between the units in a certain order and decays slowly, like an echo. This feature allows the reservoir to store information about the past and to process a signal differently depending on which signals came before it. Such contextual information is what allows the computer to “learn,” for example, that when it hears the sounds for the letters S, E, V, E, and N, a V follows S and E to spell SEVEN.
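
To make the idea of recurrent loops with a slowly decaying “echo” concrete, here is a minimal software sketch of a generic reservoir (an echo-state-network-style model, not the photonic hardware discussed below). The unit count, the tanh nonlinearity, and the scaling of the recurrent weights to a spectral radius of 0.9 are illustrative assumptions. A single input pulse followed by silence keeps reverberating through the random recurrent connections and fades gradually rather than vanishing at once.

```python
import numpy as np

def make_reservoir(n_units=100, spectral_radius=0.9, seed=0):
    """Random, fixed recurrent and input weights (sizes and scaling are hypothetical)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-1, 1, size=n_units)
    return W, W_in

def run_reservoir(W, W_in, inputs):
    """Drive the reservoir with a 1-D input sequence; return the state at every step."""
    x = np.zeros(W.shape[0])
    states = []
    for u in inputs:
        # Each unit responds nonlinearly (tanh) to its recurrent inputs plus the external input.
        x = np.tanh(W @ x + W_in * u)
        states.append(x.copy())
    return np.array(states)

W, W_in = make_reservoir()
impulse = np.zeros(50)
impulse[0] = 1.0  # a single "sound" followed by silence
states = run_reservoir(W, W_in, impulse)
echo = np.linalg.norm(states, axis=1)  # overall activity of the reservoir at each step
print(echo[[0, 5, 20, 49]].round(3))
```

Keeping the spectral radius below 1 is a common heuristic for making such echoes fade instead of growing; values closer to 1 give the reservoir a longer memory of past inputs.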

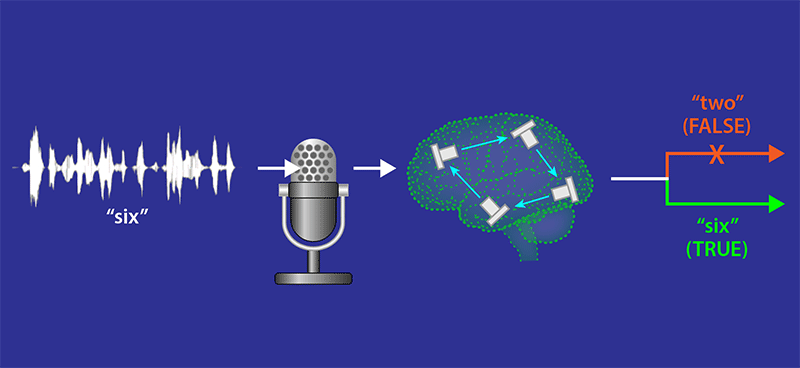

Reservoir computers must go through a “training” process, in which the connection strengths are optimized to produce a desired outcome (Fig. 1). How these strengths are set distinguishes reservoir computing from traditional artificial neural networks used in machine learning. Specifically, the connections between the reservoir units, and between the input information and the reservoir, are initialized with random strengths and left unchanged. The only things that change during the training process are the strengths of the connections between the reservoir and the readout layer.
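
The training rule can be sketched in a few lines of Python, again with a generic software reservoir rather than a photonic one. Assumptions: a toy memory task (reproduce the input from three steps earlier), ridge regression as the training method, and the sizes and scalings shown. The input and recurrent weights are drawn at random once and never changed; only `w_out`, the reservoir-to-readout weights, is fitted.

```python
import numpy as np

rng = np.random.default_rng(42)
n_units, n_steps, delay = 100, 2000, 3

# Fixed random weights: set once at initialization and never trained (hypothetical values).
W = rng.normal(size=(n_units, n_units))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))
W_in = rng.uniform(-0.5, 0.5, size=n_units)

u = rng.uniform(-1, 1, size=n_steps)
target = np.roll(u, delay)  # toy task: output the input from `delay` steps ago

# Run the reservoir and record its state at every step.
x = np.zeros(n_units)
states = np.empty((n_steps, n_units))
for t in range(n_steps):
    x = np.tanh(W @ x + W_in * u[t])
    states[t] = x

# Discard an initial transient ("washout"), then split into training and test data.
washout, split = 100, 1500
X_train, y_train = states[washout:split], target[washout:split]
X_test, y_test = states[split:], target[split:]

def train_readout(X, y, ridge=1e-6):
    """Ridge regression: these readout weights are the ONLY trained parameters."""
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ y)

w_out = train_readout(X_train, y_train)
y_pred = X_test @ w_out
nmse = np.mean((y_pred - y_test) ** 2) / np.var(y_test)  # normalized mean-square error
print(f"test NMSE: {nmse:.4f}")
```

Because the readout is linear, training reduces to solving a single linear system, which is far cheaper than adjusting every connection, as is done when training conventional neural networks.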

A reservoir computer’s ability to solve complex tasks usually comes from having a large number of nonlinear units in the reservoir. But an alternative is to use just one nonlinear unit that’s subject to what’s called a delay feedback loop [4]. In this scheme, the signal circulating in the loop is divided into a series of designated time slots (time multiplexing), and each slot acts as one unit of a “virtual” multiunit reservoir. Fewer physical units in the reservoir mean fewer connections, so time multiplexing greatly simplifies the hardware requirements. This approach has enabled the development of several all-optical reservoir computers [5, 6] and might influence how other machine-learning algorithms are implemented.
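
A simplified discrete-time model shows how one nonlinear node plus a delay loop can stand in for many units. This is a sketch under stated assumptions, not the authors’ device model: the delay line holds `n_virtual` time slots, each input sample is spread over those slots by a fixed random ±1 mask, a sin² nonlinearity with a hypothetical offset `phi` plays the role of the single physical node, and a one-slot shift per round trip (as if the delay were slightly longer than the input period) couples each virtual node to its neighbor. The parameters `alpha` and `beta` are likewise hypothetical feedback and input gains.

```python
import numpy as np

def delay_reservoir(u, n_virtual=50, alpha=0.8, beta=0.5, phi=0.2, seed=0):
    """Time-multiplexed reservoir built from ONE nonlinearity plus a delay line.

    Simplified model (assumption): on each round trip, virtual node i receives
    the delayed value of node i-1, so neighboring time slots are coupled.
    """
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_virtual)  # fixed random input mask
    delay_line = np.zeros(n_virtual)                # contents of the feedback loop
    states = np.empty((len(u), n_virtual))
    for k, u_k in enumerate(u):
        # One-slot shift: slot i now holds what slot i-1 held (slot 0 wraps to the last slot).
        delayed = np.roll(delay_line, 1)
        # The same sin^2 nonlinearity is applied in every time slot; the input sample
        # u_k is held across all slots, each weighted by its own mask value.
        delay_line = np.sin(alpha * delayed + beta * mask * u_k + phi) ** 2
        states[k] = delay_line  # the n_virtual slot values act as reservoir units
    return states

u = np.random.default_rng(1).uniform(-1, 1, size=200)
states = delay_reservoir(u)
print(states.shape)  # (200, 50)
```

Only one nonlinearity exists, evaluated once per time slot; the `n_virtual` columns of `states` then serve as reservoir units and can feed a trained linear readout just as in a reservoir with many physical units.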

Taking this multiplexing approach, Larger and co-workers have built a reservoir computer with both optical and electronic elements and demonstrated that it can recognize speech samples at a fast rate [1]. The machine takes a digitized sound wave, converts it into an optical signal, and then processes this input in the reservoir. The response from the reservoir is then further processed to produce a word. The team tested their system using voice recordings from two standard databases: spoken digits from 10 different women (from the TI-46 database) and spoken digits from 326 different men, women, and children (from the AURORA-2 database). The authors showed that their machine misidentified the digit just 0.04% of the time for the TI-46 data and 8.9% of the time for the more complex AURORA-2 data, respectably low error rates. They also demonstrated that their optoelectronic system processes information 3 times faster than the fastest all-optical reservoir computers. A key to achieving this speedup was that they encoded the sound wave information in the phase of the optical signal, whereas earlier approaches encoded this information in the signal’s intensity [7, 8]. (See note in Ref. [9].)
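
The step that turns the reservoir response into a word can be sketched as follows. This is an assumption about the general approach, not the authors’ exact pipeline: a trained readout produces one score per digit for every time frame of the word, the scores are averaged over the word, and the highest-scoring digit wins (a winner-take-all rule often used in reservoir speech-recognition studies). The word error rate is then the fraction of words assigned the wrong digit; 0.04% corresponds to about 4 mistakes per 10,000 words.

```python
import numpy as np

def classify_word(states, W_out):
    """Pick a digit (0-9) for one spoken word.

    states: (n_frames, n_units) reservoir responses over the duration of the word
    W_out:  (n_units, 10) trained readout weights, one column per digit (hypothetical)
    """
    scores = states @ W_out                        # per-frame score for each digit
    return int(np.argmax(scores.mean(axis=0)))     # time-average, then winner-take-all

def word_error_rate(predicted, true):
    """Fraction of words assigned the wrong digit."""
    predicted, true = np.asarray(predicted), np.asarray(true)
    return float(np.mean(predicted != true))

# Toy example with made-up numbers: 5 frames, 10 units, unit 7 active throughout the word.
toy_states = np.zeros((5, 10))
toy_states[:, 7] = 1.0
print(classify_word(toy_states, np.eye(10)))              # 7
print(word_error_rate([3, 7, 7, 1], [3, 7, 2, 1]))        # 0.25
```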

In addition to this speedup, the reservoir computer demonstrated by Larger et al. has a number of attractive features. All of its components (lasers, electro-optic modulators, and photodiodes) can be packed into a photonic integrated chip. Moreover, unlike other reservoir computers that use time multiplexing, the authors’ implementation doesn’t require strict timing between injecting the input signal and reading the output; in fact, it works most efficiently when the input and readout are asynchronous. Finally, the time multiplexing approach allows the authors to increase the number of connections between the input and the reservoir from one (the usual number) to three, which is beneficial for speech processing. Altogether, the work by Larger et al. [1] represents a significant improvement in the hardware for reservoir computing.

The field of reservoir computing is growing rapidly, with physicists, engineers, computer scientists, neuroscientists, and mathematicians working hand in hand to develop the concept and explore applications. Finding the right hardware to interface the digital and analog sides of the computer, and to shield the system from noise, remains a challenge. And reservoir computing is still in the exploratory stages. Besides speech recognition, the approach has been tested successfully in applications such as processing radar signals and removing distortion from transmitted signals (channel equalization). For these technologies, photonics-based reservoir computers are at the forefront. Current devices have processing speeds of 20 gigabytes per second (GB/s) and should soon reach 100 GB/s, a standard speed for network connections. The next step would be to achieve this high processing speed in a miniaturized device that can be integrated with other photonic elements and that uses passive devices to reduce power consumption, as demonstrated in Ref. [10]. In this context, the multimillion-euro funding of the European Commission through projects such as NARESCO, PHOCUS, and PHRESCO has provided a huge push.

This research is published in Physical Review X.

References

1. L. Larger, A. Baylón-Fuentes, R. Martinenghi, V. S. Udaltsov, Y. K. Chembo, and M. Jacquot, “High-speed Photonic Reservoir Computing Using a Time-Delay-Based Architecture: Million Words Per Second Classification,” Phys. Rev. X 7, 011015 (2017).

2. W. Maass, T. Natschläger, and H. Markram, “Real-Time Computing Without Stable States: A New Framework for Neural Computation Based on Perturbations,” Neural Comput. 14, 2531 (2002).

3. H. Jaeger and H. Haas, “Harnessing Nonlinearity: Predicting Chaotic Systems and Saving Energy in Wireless Communication,” Science 304, 78 (2004).

4. L. Appeltant, M. C. Soriano, G. Van der Sande, J. Danckaert, S. Massar, J. Dambre, B. Schrauwen, C. R. Mirasso, and I. Fischer, “Information Processing Using a Single Dynamical Node as Complex System,” Nature Commun. 2, 468 (2011).

5. D. Brunner, M. C. Soriano, C. R. Mirasso, and I. Fischer, “Parallel Photonic Information Processing at Gigabyte Per Second Data Rates Using Transient States,” Nature Commun. 4, 1364 (2013).

6. Q. Vinckier, F. Duport, A. Smerieri, K. Vandoorne, P. Bienstman, M. Haelterman, and S. Massar, “High-Performance Photonic Reservoir Computer Based on a Coherently Driven Passive Cavity,” Optica 2, 438 (2015).

7. Y. Paquot, F. Duport, A. Smerieri, J. Dambre, B. Schrauwen, M. Haelterman, and S. Massar, “Optoelectronic Reservoir Computing,” Sci. Rep. 2, 287 (2012).

8. L. Larger, M. C. Soriano, D. Brunner, L. Appeltant, J. M. Gutiérrez, L. Pesquera, C. R. Mirasso, and I. Fischer, “Photonic Information Processing Beyond Turing: An Optoelectronic Implementation of Reservoir Computing,” Opt. Express 20, 3241 (2012).

9. There are other routes to achieving this speedup in machines that, like the one built by Larger et al., treat time as a continuous quantity as opposed to a discrete quantity.

10. K. Vandoorne, P. Mechet, T. Van Vaerenbergh, M. Fiers, G. Morthier, D. Verstraeten, B. Schrauwen, J. Dambre, and P. Bienstman, “Experimental Demonstration of Reservoir Computing on a Silicon Photonics Chip,” Nature Commun. 5, 3541 (2014).