Learning Language Requires a Phase Transition

A long-standing puzzle in linguistics is how children learn the basic grammatical structure of their language, so that they can create sentences they have never heard before. A new study suggests that this process involves a kind of phase transition in which the “deep structure” of a language crystallizes out abruptly as grammar rules are intuited by the learner. At the transition, a language switches from seeming like a random jumble of words to a highly structured communication system that is rich in information.

American linguist Noam Chomsky of the Massachusetts Institute of Technology famously proposed that humans are born with an innate knowledge of universal structural rules of grammar. That idea has been strongly criticized, but it remains puzzling how children come to grasp these rules.

In all human languages, the relationships between the words and the grammatical rules governing their combination form a tree-like network. For example, a sentence might be subdivided into a noun phrase and a verb phrase, and each of these in turn can be broken down into smaller word groupings. Each of these subdivisions is represented as a branching point in a tree-type diagram. The “leaves” of this tree—the final nodes at the branch tips—are the actual words: specific instances of generalized categories like “noun,” “verb,” “pronoun,” and so on. The simplest type of such a grammar is called a context-free grammar (CFG), the kind shared by almost all human languages.
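The tree picture can be made concrete with a few lines of Python. What follows is only a minimal sketch: the toy grammar, its seven-word vocabulary, and the helper names `expand` and `leaves` are invented for illustration and do not come from the study. Each category is expanded into smaller groupings until only words remain, and reading off the leaves gives the sentence.

```python
import random

# Hypothetical toy grammar: each category maps to its possible expansions.
# Symbols that do not appear as keys are treated as actual words (leaves).
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["Pronoun"]],
    "VP": [["V", "NP"], ["V"]],
    "Det": [["the"], ["a"]],
    "N": [["child"], ["sentence"]],
    "Pronoun": [["she"]],
    "V": [["hears"], ["speaks"]],
}

def expand(symbol, rng):
    """Build a derivation tree: (category, children) for categories, a string for words."""
    if symbol not in GRAMMAR:
        return symbol
    rule = rng.choice(GRAMMAR[symbol])  # every expansion is equally likely here
    return (symbol, [expand(child, rng) for child in rule])

def leaves(tree):
    """Read the words off the branch tips, left to right."""
    if isinstance(tree, str):
        return [tree]
    _, children = tree
    return [word for child in children for word in leaves(child)]

rng = random.Random(0)
tree = expand("S", rng)
print(tree)
print(" ".join(leaves(tree)))
```

Because this grammar has no rule that refers back to itself, every derivation terminates, and sentences are between two and five words long. Real grammars are recursive, which is what lets them produce an unbounded set of sentences.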

Physicist Eric DeGiuli of the École Normale Supérieure in Paris proposes that CFGs can be treated as if they were physical objects, with a “surface” consisting of all possible arrangements of words into sentences, including, in principle, nonsensical ones. His idea is that children instinctively deduce the “deep” grammar rules as they are exposed to the tree’s “surface” (the sentences they hear). Learning the rules that allow some sentences but not others, he says, amounts to the child assigning weights to the branches and constantly adjusting these weights in response to the language she hears. Eventually, the branches leading to ungrammatical sentences acquire very small weights, and those sentences are recognized as improbable. The many possible word configurations, DeGiuli says, are like the microstates in statistical mechanics: the set of all possible arrangements of a system’s constituent particles.

In a CFG in which every branch carries the same weight, all possible sentences are equally likely, and the language is indistinguishable from random word combinations, carrying no meaningful information. The question is, among all possible CFGs, what kinds of weight distributions distinguish those that produce random-word sentences from those that produce information-rich ones?
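The contrast between equal and unequal weights can be illustrated with a small weighted grammar. This is a sketch under stated assumptions: the grammar, the parameter `w`, and the functions `sentence_distribution`, `entropy_bits`, and `make_grammar` are hypothetical, and Shannon entropy is used here simply as a convenient gauge of how close the sentence distribution is to a random jumble, not as the measure used in the study.

```python
import math
from itertools import product

def sentence_distribution(grammar, symbol):
    """Map each word sequence derivable from `symbol` to its probability."""
    if symbol not in grammar:  # a word: the only "sentence" is the word itself
        return {(symbol,): 1.0}
    rules = grammar[symbol]
    total = sum(weight for weight, _ in rules)
    dist = {}
    for weight, rhs in rules:
        parts = [sentence_distribution(grammar, s) for s in rhs]
        # Combine one sub-sentence from each child, multiplying probabilities.
        for combo in product(*(part.items() for part in parts)):
            words = tuple(w for seq, _ in combo for w in seq)
            prob = weight / total
            for _, p in combo:
                prob *= p
            dist[words] = dist.get(words, 0.0) + prob
    return dist

def entropy_bits(dist):
    """Shannon entropy of a sentence distribution, in bits."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def make_grammar(w):
    """Toy grammar; `w` is the weight on the disfavored option at each choice."""
    return {
        "S": [(1.0, ["NP", "VP"])],
        "NP": [(1.0, ["she"]), (w, ["it"])],
        "VP": [(1.0, ["V", "NP"])],
        "V": [(1.0, ["hears"]), (w, ["sees"])],
    }

uniform = sentence_distribution(make_grammar(1.0), "S")
skewed = sentence_distribution(make_grammar(0.01), "S")
print(len(uniform), entropy_bits(uniform))
print(max(skewed, key=skewed.get), entropy_bits(skewed))
```

With equal weights, each of the eight possible sentences has probability 1/8, so the entropy takes its maximum value of log2(8) = 3 bits. When the disfavored branches are given small weights, nearly all of the probability collects on a single sentence and the entropy drops well below one bit.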

DeGiuli’s theoretical analysis—which uses techniques from statistical mechanics—shows that there are two key factors involved: how much the weightings “prune” branches deep within the hierarchical tree, and how much they do so at the surface (where specific sentences appear). In both cases, this sparseness of branches plays a role analogous to a temperature in statistical mechanics. Lowering the temperature both at the surface and in the interior means reducing more of the weights.
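One way to picture how a temperature can control sparseness is to draw the weights on a node's branches at random, with a spread that grows as the temperature falls. The sketch below assumes log-normally distributed weights with a spread of 1/sqrt(T). That parameterization, along with the names `branch_probabilities` and `mean_entropy`, is an illustrative assumption rather than a description of the calculation in the study, and the example treats a single node in isolation, so it shows only the pruning effect and not the phase transition itself.

```python
import math
import random

def branch_probabilities(n_branches, temperature, rng):
    """Draw weights exp(g / sqrt(T)) with g ~ N(0, 1), then normalize them."""
    sigma = 1.0 / math.sqrt(temperature)  # assumed link between T and spread
    weights = [math.exp(sigma * rng.gauss(0.0, 1.0)) for _ in range(n_branches)]
    total = sum(weights)
    return [w / total for w in weights]

def entropy_bits(probs):
    """Shannon entropy of the branch probabilities, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mean_entropy(n_branches, temperature, trials=200, seed=0):
    """Average the branch entropy over many random draws of the weights."""
    rng = random.Random(seed)
    return sum(
        entropy_bits(branch_probabilities(n_branches, temperature, rng))
        for _ in range(trials)
    ) / trials

# High temperature: nearly equal weights. Low temperature: a few branches dominate.
for temperature in (100.0, 1.0, 0.01):
    print(temperature, round(mean_entropy(20, temperature), 2))
```

At high temperature the 20 branches are almost equally likely and the entropy sits near its ceiling of log2(20) bits. At low temperature one or two branches carry nearly all the weight, which is the sense in which the rest of the tree is pruned.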

As the deep temperature is lowered—meaning the tree interior becomes sparser—DeGiuli sees an abrupt switch from CFGs that are random and disorderly to ones that have high information content. This switch is a phase transition analogous to the freezing of water. He thinks that something like this switch may explain why, at a certain stage of development, a child learns very quickly how to construct grammatical sentences.

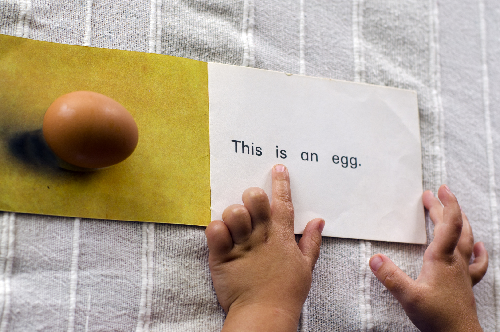

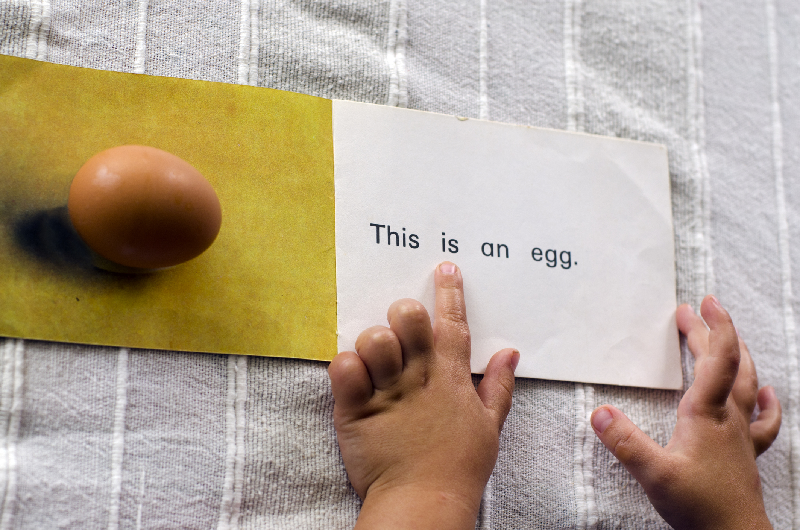

At that point, words cease to be mere labels and instead become the ingredients of sentences with complex structures and meanings. This transition doesn’t depend on getting all the right weightings; children continue to refine their understanding of language. The use of inductive and probabilistic inference in DeGiuli’s theory is consistent with what is observed in children’s language acquisition [1].

DeGiuli hopes that this abstract process can ultimately be connected to observations at the neurological level. Perhaps researchers could learn what might inhibit the transition to rich language for children with learning disabilities.

“The question of how a child manages to extract a grammar from a series of examples is widely debated, and this paper suggests a mechanism for how it is possible,” says statistical physicist Richard Blythe of Edinburgh University in the UK. “I think this is a very intriguing idea, and it could, in principle, make quantitative predictions. So it may have a chance of being put to the test.”

This research is published in Physical Review Letters.

–Philip Ball

Philip Ball is a freelance science writer in London. His latest book is How Life Works (Picador, 2024).

References

[1] C. Yang, S. Crain, R. C. Berwick, N. Chomsky, and J. J. Bolhuis, “The growth of language: Universal Grammar, experience, and principles of computation,” Neurosci. Biobehav. Rev. 81, 103 (2017).