Information Flow in Molecular Machines

Molecular machines perform mechanical functions in cells such as locomotion and chemical assembly, but these “tiny engines” don’t operate under the same thermodynamic design principles as more traditional engines. A new theoretical model relates molecular-scale heat engines to information engines, which are systems that use information to generate work, like the famous “Maxwell’s demon” [1]. The results suggest that a flow of information lies at the heart of molecular machines and of larger heat engines such as thermoelectric devices.

The prototypical engine is a steam engine, in which work is produced by a fluid exposed to a cycle of hot and cold temperatures. But there are other engine designs, such as the bipartite engine, which has two separate parts held at different temperatures. This design is similar to that of some molecular machines, such as the kinesin motor, which carries “molecular cargo” across biological cells. “Bipartite heat engines are common in biology and engineering, but they really haven’t been studied through a thermodynamics lens,” says Matthew Leighton from Simon Fraser University (SFU) in Canada. He and his colleagues have now analyzed bipartite heat engines in a way that reveals a connection to information engines.

An engine that runs on information may sound far-fetched. Indeed, the idea comes from Maxwell’s demon, a small character in a 19th-century thought experiment who can separate hot molecules from cold molecules in a gas. On the face of it, such sorting would violate the second law of thermodynamics because it would reduce entropy (lower disorder) without expending energy. In reality, no violation occurs; careful accounting reveals that the demon’s information collecting requires a hidden cost of work. This idea establishes a fundamental connection between information and energy.

Motivated by this thought experiment, researchers have built devices that extract energy from a thermal system using demon-like observations of thermal fluctuations (see Viewpoint: Exorcising Maxwell’s Demon). But whether information-driven machines exist “in the real world” outside the laboratory remains an open question, says team member David Sivak of SFU. The researchers believe that they have now found such a real-world example in bipartite heat engines.

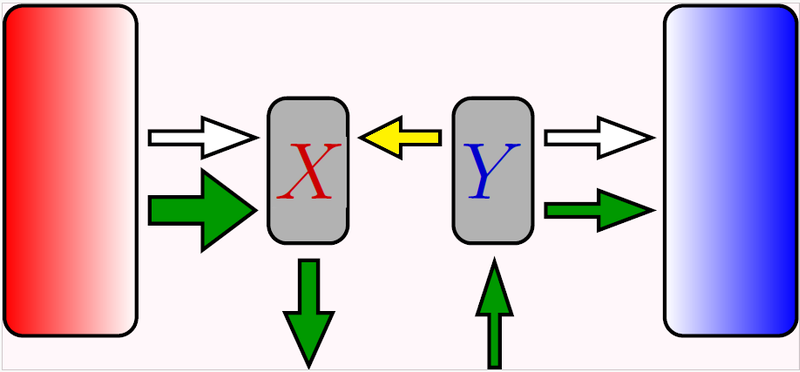

They began by working out the thermodynamics of a bipartite heat engine. They labeled the two parts of the engine X and Y, with X in contact with a hot reservoir and Y in contact with a cold reservoir. Heat flows into X and out of Y, and each part experiences a continuous loss or gain of entropy associated with the heat flow.
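The entropy bookkeeping described above can be illustrated with standard textbook thermodynamics. The sketch below is not the researchers' model: it assumes a steady-state engine between two ideal reservoirs, and the function name and all numbers are hypothetical, chosen only to show how heat flows translate into entropy flows.

```python
def entropy_flows(heat_in_rate, work_rate, t_hot, t_cold):
    """Steady-state entropy rates (J/K/s) for a two-reservoir heat engine.

    Textbook bookkeeping only (an illustrative assumption, not the paper's model):
    a reservoir at temperature T that exchanges heat at rate Q changes its
    entropy at rate Q / T.
    """
    # First law at steady state: heat in = work out + heat dumped to the cold side.
    heat_out_rate = heat_in_rate - work_rate
    # The hot reservoir loses entropy as heat leaves it toward X.
    s_hot = -heat_in_rate / t_hot
    # The cold reservoir gains entropy as heat arrives from Y.
    s_cold = heat_out_rate / t_cold
    # The second law requires the sum to be non-negative.
    return s_hot, s_cold, s_hot + s_cold


# Hypothetical values: 10 W of heat in, 2 W of work out, reservoirs at 400 K and 300 K.
s_hot, s_cold, s_total = entropy_flows(
    heat_in_rate=10.0, work_rate=2.0, t_hot=400.0, t_cold=300.0
)
print(f"hot: {s_hot:.4f}  cold: {s_cold:.4f}  total: {s_total:.4f} J/K/s")
```

With these made-up numbers the cold reservoir gains slightly more entropy than the hot reservoir loses, so the total is positive, as the second law requires.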

The researchers showed that for the engine to produce work, there must be a particular type of entropy exchange between X and Y, referred to as information flow. The information here is a correlation between the possible microscopic states of X and Y. This correlation is zero if the states of X and Y are entirely independent, but if, for example, you can determine X’s state by measuring Y, then the correlation is not zero. From this model, the researchers derived an inequality, similar to the second law of thermodynamics, relating the engine’s work output to the flow of information between X and Y.
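One common way to quantify a correlation of this kind is the mutual information between two variables, measured in bits. The sketch below is an illustration under that assumption: it computes a static mutual information for two hypothetical two-state systems, whereas the researchers' inequality involves a rate of information flow, which this code does not attempt to model. The function name and the example distributions are invented for the illustration.

```python
import math


def mutual_information_bits(joint):
    """Mutual information I(X;Y) in bits for a joint probability table.

    joint[i][j] is the probability that X is in state i and Y is in state j.
    """
    # Marginal distributions of X (row sums) and Y (column sums).
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0:  # terms with zero probability contribute nothing
                mi += p * math.log2(p / (px[i] * py[j]))
    return mi


# Hypothetical case 1: X and Y are entirely independent.
independent = [[0.25, 0.25], [0.25, 0.25]]
# Hypothetical case 2: measuring Y fully determines the state of X.
locked = [[0.5, 0.0], [0.0, 0.5]]

mi_independent = mutual_information_bits(independent)
mi_locked = mutual_information_bits(locked)
print(mi_independent, mi_locked)
```

The independent case gives zero bits, matching the statement that the correlation vanishes when the states are unrelated. The fully locked case gives one bit, the most that two two-state systems can share.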

Sivak admits that an information flow in a heat engine seems counterintuitive. “The more conventional picture is one with an energy flow between subsystems, which you can imagine as a piston being pushed or a gear being turned,” he says. But the team’s analysis of the bipartite heat engine shows that such mechanical action is not enough to produce work. There must also be information exchange.

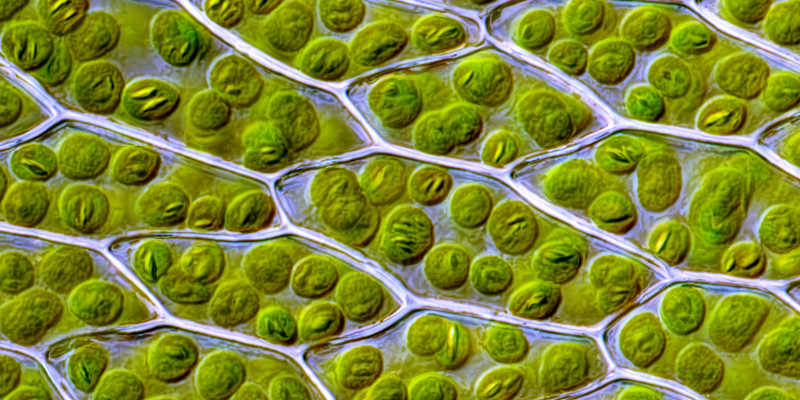

The researchers apply their information-engine framework to several examples of bipartite heat engines, such as photosystem II, a molecular machine that harvests sunlight for the splitting of water molecules in plant cells [2]. The machine can be divided into two parts: one part that is in contact with an effectively hot reservoir of photons from the Sun and another part that is in contact with an effectively cold reservoir of the surrounding cell. According to their theory, information should flow between these two parts at a rate no less than 2000 bits per second.

The researchers don’t know what kind of information flows between the two parts of the molecule. Leighton speculates that it could be a correlation between photon-absorbing states and chemical-reacting states within the machine. The team explored this possibility using a simplified model of photosystem II’s electronic and chemical states, finding evidence of information exchange in the calculated population statistics of the states.

The information-engine framework may extend beyond molecular machines, as the bipartite design also applies to thermoelectric devices, which convert a temperature difference into a voltage difference. The team predicts that an information flow is occurring inside these devices, presumably in the form of correlations between voltage and current fluctuations.

The researchers make a convincing case that their model applies to a plethora of biological and human-made machines, says Édgar Roldán, an information-engine expert from the Abdus Salam International Center for Theoretical Physics in Italy. The new framework will allow other researchers to infer the minimal amount of information transmission needed per unit time among different subsystems of a molecular machine, he says, which is “the most important application of this work.”

–Michael Schirber

Michael Schirber is a Corresponding Editor for Physics Magazine based in Lyon, France.

References

1. M. P. Leighton et al., “Information arbitrage in bipartite heat engines,” Phys. Rev. X 14, 041038 (2024).

2. J. Barber, “The engine of life: Photosystem II,” Biochem (Lond) 28, 7 (2006).