Measuring Entropy Production at the Mesoscale

Over the last three decades, statistical physics has gone from describing systems in, and close to, equilibrium to describing certain classes of far-from-equilibrium systems. In particular, researchers developed an exact thermodynamic framework, known as stochastic thermodynamics, that defines entropy production, applied work, and heat exchange in small nonequilibrium systems immersed in a thermal bath. Prototypical examples of such systems are molecular motors and the receptors responsible for cellular sensing, in which the transition rates between different well-defined states can be fully characterized [1]. Recently, however, researchers have started to consider larger nonequilibrium systems, mesoscopic and macroscopic ones such as cells, tissues, and entire organisms, for which it is impossible to identify all of the system’s microscopic states. To tackle this problem, Dominic Skinner and Jörn Dunkel of the Massachusetts Institute of Technology developed a mesoscopic model that places a lower bound on energy consumption using an experimentally accessible quantity: the waiting time distribution of transitions between so-called metastates, the coarse-grained, observable steady states of the system [2]. The model thus provides a route to directly measuring the extent to which a mesoscopic system is out of equilibrium.

According to classical thermodynamics, no system, not even an ideal frictionless one, can transform all of its heat into work, which limits the efficiency of thermodynamics-based devices such as heat engines. The quantity that measures how much thermal energy is unavailable for mechanical work is entropy, which, according to the second law of thermodynamics, is produced at a positive rate by every irreversible process. Entropy production therefore quantifies the “cost” of keeping a system in a nonequilibrium steady state. Complementary facets of the second law are the necessity of energy dissipation in finite-time processes and the absence of dissipation in quasistatic processes, two effects that are recovered by linear response theory [3].

Life, however, is associated with processes such as growth, self-organization, maintenance, and aging that occur far beyond the linear-response regime. Even so, the rates at which energy is consumed and entropy is produced while sustaining life are limited. These rates reflect the thermodynamic cost of living and hence determine the capacity of an organism to survive by sensing environmental conditions and adapting to changes in its surroundings [4].

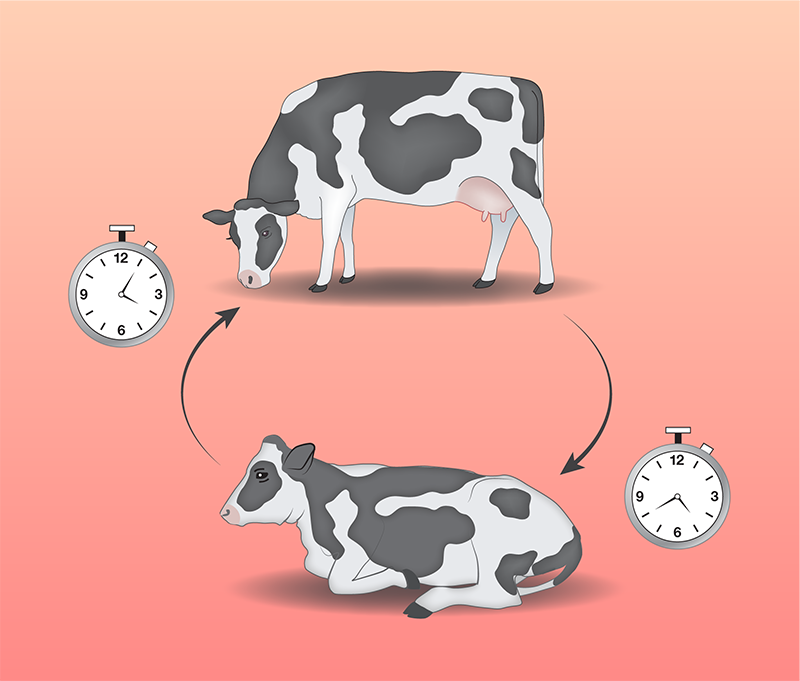

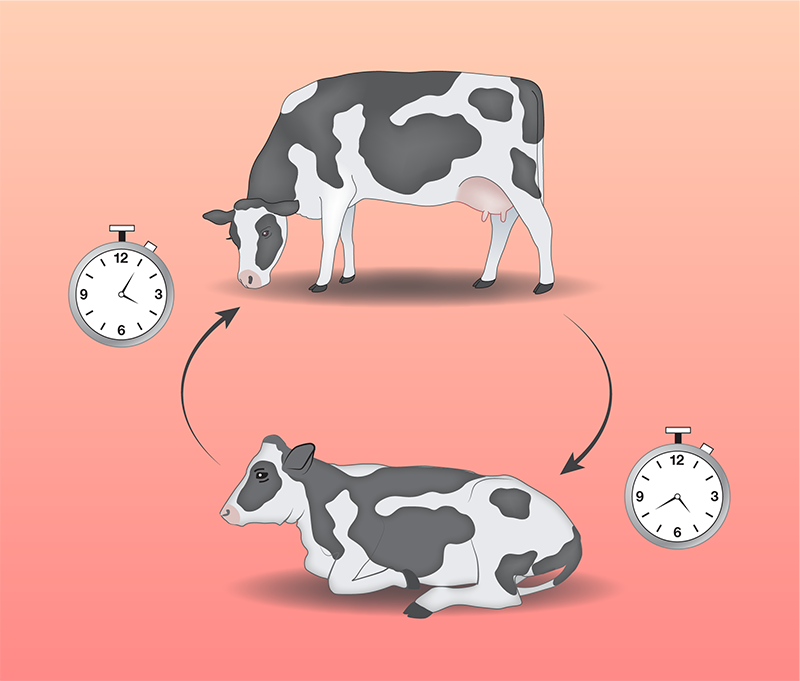

In their model, Skinner and Dunkel consider a mesoscale system in contact with a heat bath. Rather than focusing on transitions between microscopic steady states, they use a coarse-grained approach to describe how the system evolves between two metastates. For a cow, these metastates can be as simple as standing or lying down. The theory relies on measuring the statistics, such as the variance, of the time that the system spends in each of those two states. Using optimization techniques, they then turn those statistics into a lower bound on the entropy production rate. Their new expression complements the recently introduced thermodynamic uncertainty relation, which also uses the waiting time distributions for transitions between coarse-grained nonequilibrium steady states to bound entropy production [5].
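
The raw input for this kind of analysis is simply a record of which metastate the system occupies over time. As a minimal sketch, assuming a regularly sampled sequence of metastate labels, the Python snippet below collects the dwell times in each metastate and reports their mean, variance, and squared coefficient of variation. It does not implement Skinner and Dunkel’s optimization; the function names `waiting_times` and `summarize` and the standing/lying sequence are made up for illustration.

```python
from itertools import groupby
from statistics import mean, pvariance


def waiting_times(states, dt):
    """Collect dwell times per metastate from a regularly sampled label sequence.

    `states` is any sequence of hashable labels sampled every `dt` time units.
    The first and last sojourns are discarded because the recording cuts them
    short, so their true durations are unknown.
    """
    # Run-length encode the sequence: (label, number of consecutive samples).
    runs = [(label, sum(1 for _ in group)) for label, group in groupby(states)]
    dwell = {}
    for label, count in runs[1:-1]:
        dwell.setdefault(label, []).append(count * dt)
    return dwell


def summarize(times):
    """Mean, population variance, and squared coefficient of variation."""
    m = mean(times)
    var = pvariance(times, mu=m)
    return {"mean": m, "variance": var, "cv2": var / m**2}


if __name__ == "__main__":
    # Made-up record: "S" = standing, "L" = lying down, one sample per time step.
    dwell = waiting_times("SSSLLSSSSLLLSSLLLLS", dt=1.0)
    for label, times in sorted(dwell.items()):
        print(label, summarize(times))
```

Dropping the two edge sojourns is a deliberate choice: keeping them would bias the dwell times downward, since the recording window truncates them.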

To demonstrate the usefulness of their approach, Skinner and Dunkel first apply it to active sensors, such as those used by cells to monitor the concentrations of chemicals in their environment. These sensors can increase their sensing accuracy by expending energy, overcoming the equilibrium sensing limit [6]. By explicitly calculating the entropy production rate, which is possible in this simple system, the duo finds that their predicted lower bound gives a good estimate for small and intermediate entropy production rates, outperforming the thermodynamic uncertainty relation. Furthermore, they confirm that much larger variances can be generated with much smaller energy consumption rates if additional microscopic states are introduced.
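
When the full network of microscopic states and rates is known, as in the simple sensor case, the steady-state entropy production rate can be computed with the standard stochastic-thermodynamics expression sigma = (k_B/2) sum over i, j of (p_i w_ij - p_j w_ji) ln[(p_i w_ij)/(p_j w_ji)], where w_ij is the rate from state i to state j and p is the steady-state distribution. The minimal Python sketch below evaluates it for a hypothetical three-state ring, not for the sensor model Skinner and Dunkel used. It assumes an irreducible network in which every allowed transition has a reverse transition; the function names are illustrative.

```python
import math


def stationary_distribution(rates):
    """Steady state p of a continuous-time Markov jump process.

    rates[i][j] is the transition rate from state i to state j (diagonal ignored).
    Assumes the network is irreducible, so the steady state is unique.
    """
    n = len(rates)
    # Balance for state j: inflow sum_i p_i w_ij minus outflow p_j sum_k w_jk = 0.
    a = [[rates[i][j] if i != j else -sum(rates[j][k] for k in range(n) if k != j)
          for i in range(n)] for j in range(n)]
    b = [0.0] * n
    # The balance equations are linearly dependent; swap one for normalization.
    a[-1] = [1.0] * n
    b[-1] = 1.0
    # Gaussian elimination with partial pivoting, then back substitution.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    p = [0.0] * n
    for r in reversed(range(n)):
        p[r] = (b[r] - sum(a[r][c] * p[c] for c in range(r + 1, n))) / a[r][r]
    return p


def entropy_production_rate(rates):
    """Steady-state entropy production rate in units of k_B per unit time."""
    n = len(rates)
    p = stationary_distribution(rates)
    sigma = 0.0
    for i in range(n):
        for j in range(i + 1, n):  # each unordered pair once, so no factor 1/2
            if (rates[i][j] > 0) != (rates[j][i] > 0):
                raise ValueError("every transition needs a reverse transition")
            if rates[i][j] == 0:
                continue
            forward = p[i] * rates[i][j]   # probability flux i -> j
            backward = p[j] * rates[j][i]  # probability flux j -> i
            sigma += (forward - backward) * math.log(forward / backward)
    return sigma


if __name__ == "__main__":
    # Hypothetical ring 0 -> 1 -> 2 -> 0 with forward rate 2 and backward rate 1.
    ring = [[0, 2, 1], [1, 0, 2], [2, 1, 0]]
    print(stationary_distribution(ring), entropy_production_rate(ring))
```

For a ring with uniform forward rate k+ and backward rate k-, the steady state is uniform and the expression reduces to (k+ - k-) ln(k+/k-), so the example ring gives ln 2, about 0.693 k_B per unit time; with detailed balance (k+ = k-) it gives zero.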

Skinner and Dunkel then apply their model to previously published data on experimental systems ranging from gene regulatory networks to cows, where the microscopic states are clearly out of reach. They demonstrate that meaningful insight into energy consumption can be obtained without understanding what underlies the states of these systems. Finally, they consider data from “precise timers” that exhibit small variances in their ticks, such as heartbeats. In this case, it is not possible to estimate the entropy production rate numerically, so Skinner and Dunkel derive an analytical expression that relates the variance in beating time to the entropy production rate in the limit of infinite precision. The duo shows that the entropic cost of maintaining a heart’s beating time decreases roughly linearly with increasing variance in the normalized waiting time distribution of the beats. Put another way, the data imply that it is less costly to maintain the heartbeat of a young person than that of an old one.
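
For comparison, the thermodynamic uncertainty relation also yields a simple bound for a clock. This is not Skinner and Dunkel’s analytical expression. Assume the ticks form a renewal process whose tick count N(t) is a current in a Markovian nonequilibrium steady state. At long times <N> is about t/<tau> and Var(N) is about t Var(tau)/<tau>^3, so the relation <N>^2/Var(N) <= Delta S_tot/(2 k_B) implies that each tick produces at least 2 k_B/CV^2 of entropy, where CV^2 = Var(tau)/<tau>^2 is the variance of the normalized waiting time. The minimal Python sketch below evaluates this bound; the function names and the interval values are hypothetical.

```python
import math
from statistics import mean, pvariance


def tur_entropy_per_tick(intervals):
    """TUR lower bound on entropy produced per tick, in units of k_B.

    Assumes the ticks form a renewal process whose count is a current in a
    Markovian nonequilibrium steady state; then Delta S_tick >= 2 / CV^2,
    with CV^2 = Var(tau) / <tau>^2 the variance of the normalized interval.
    """
    m = mean(intervals)
    cv2 = pvariance(intervals, mu=m) / m**2
    # A perfectly regular clock (CV^2 = 0) would require unbounded dissipation.
    return math.inf if cv2 == 0 else 2.0 / cv2


def tur_entropy_rate(intervals):
    """Same bound per unit time (k_B per time unit used for `intervals`)."""
    return tur_entropy_per_tick(intervals) / mean(intervals)


if __name__ == "__main__":
    # Hypothetical beat-to-beat intervals in milliseconds, not real data.
    rr = [810, 790, 805, 795, 800, 812, 788]
    print(tur_entropy_per_tick(rr), tur_entropy_rate(rr))
```

This bound falls as 1/CV^2 as beat-to-beat variability grows and diverges in the limit of infinite precision.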

These examples were chosen to emphasize how easily the approach can be used to analyze experimental data and to highlight the theory’s applicability, its accuracy, and its lack of assumptions about the system’s underlying microscopic states. The examples also demonstrate the versatility of the model: In many systems, only the coarse-grained states can be fully characterized (the cow is either standing or lying down). The model nonetheless provides a reasonable estimate of the entropy production rate as a function of the cow’s living conditions.

Thanks to the model, which performs reasonably even with limited data, obtaining an estimate of the entropy production rate of a mesoscopic system is now a simple exercise. It is also now possible to consider the entropy production rate as a parameter in the evolutionary design of a living organism, an exciting possibility. A natural next step is to extend the theory to address the time evolution of a system’s steady state as it ages. Tools like the one developed here by Skinner and Dunkel lay the foundation for understanding what limits the sensing and adaptation of living systems, a perspective that will likely have far-reaching consequences.

References

- U. Seifert, “Stochastic thermodynamics, fluctuation theorems and molecular machines,” Rep. Prog. Phys. 75, 126001 (2012).

- D. J. Skinner and J. Dunkel, “Estimating entropy production from waiting time distributions,” Phys. Rev. Lett. 127, 198101 (2021).

- P. Nazé and M. V. S. Bonança, “Compatibility of linear-response theory with the second law of thermodynamics and the emergence of negative entropy production rates,” J. Stat. Mech. 2020, 013206 (2020).

- S. E. Harvey et al., “Universal energy accuracy tradeoffs in nonequilibrium cellular sensing,” arXiv:2002.10567 (2020).

- D. J. Skinner and J. Dunkel, “Improved bounds on entropy production in living systems,” Proc. Natl. Acad. Sci. 118, e2024300118 (2021).

- H. C. Berg and E. M. Purcell, “Physics of chemoreception,” Biophys. J. 20, 193 (1977).