Quantum-Aided Machine Learning Shows Its Value

Machine learning allows computers to recognize complex patterns such as faces and also to create new and realistic-looking examples of such patterns. Working toward improving these techniques, researchers have now given the first clear demonstration of a quantum algorithm performing well when generating these realistic examples, in this case, creating authentic-looking handwritten digits [1]. The researchers see the result as an important step toward building quantum devices able to go beyond the capabilities of classical machine learning.

The most common use of neural networks is classification—recognizing handwritten letters, for example. But researchers increasingly aim to use algorithms on more creative tasks such as generating new and realistic artworks, pieces of music, or human faces. These so-called generative neural networks can also be used in automated editing of photos—to remove unwanted details, such as rain.

As Alejandro Perdomo-Ortiz of Zapata Computing in Toronto notes, incorporating quantum computing into today’s generative networks could lead to much better performance. So researchers have been trying to implement algorithms on the current generation of so-called noisy intermediate-scale quantum devices, rudimentary quantum machines with fewer than about 50 quantum bits (qubits).

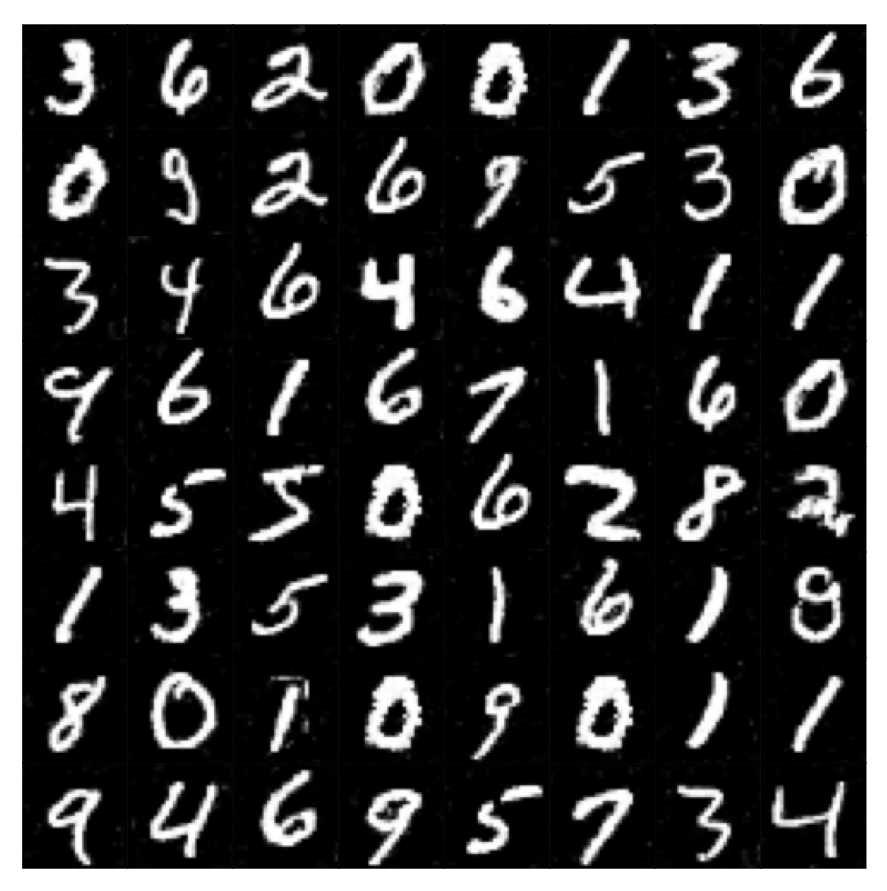

“There has been growing interest in applying quantum-circuit-based algorithms in generative modeling,” says Perdomo-Ortiz, but success so far has been limited. In generating realistic handwritten digits—a standard benchmark in the field—previous studies have only managed small, grainy, low-resolution figures barely resembling those of the training set. Now he and his colleagues report improved results with a new machine-learning architecture.

Their work exploits a so-called adversarial network—a type of neural network built from two subnetworks, a generator and a discriminator. The generator network learns to produce realistic images by starting with an initial seed distribution of probabilities for different images—often chosen with equal probabilities for all images—and then learning by trial and error. It gradually adjusts to give high probabilities to images it generates that are similar to those in the training data.

The discriminator network plays the role of an adversary that tries to distinguish fake images produced by the generator from real training images. Based on the discriminator’s performance, the generator then adjusts itself to be able to fool the discriminator more effectively by producing more realistic images. The discriminator, in turn, adjusts to better spot fakes. In essence, the generator learns to perform better by being tested against an opponent that is, meanwhile, learning to be the best adversary.
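This contest is often written as a minimax objective. The form below is the standard textbook formulation of an adversarial network and is not necessarily the loss used in the study; the symbols $G$ (generator), $D$ (discriminator), $p_{\text{data}}$ (distribution of training images), and $p_z$ (seed distribution) are notation introduced here for illustration.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

Here $D(x)$ is the discriminator's estimated probability that $x$ is a real image. The discriminator adjusts itself to push the expression up, while the generator pushes it down by making $G(z)$ harder to flag as fake.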

But the effectiveness of such adversarial networks depends on the starting seed distribution of probabilities for the images. To improve system performance, Perdomo-Ortiz and colleagues decided to augment this adversarial network with a quantum circuit designed to bring the choice of seed probabilities into the learning process.

Based on previous work, they suspected that one specific subset of elements within the discriminator network (a “layer” in the system) should contain the crucial information needed to improve the generator’s performance. The team gave their quantum circuit the task of representing that layer’s state as it evolved, and feeding that information as a seed to the generator network for repeated trials during the adversarial process. The quantum circuit acted like a spy for a baseball team, stealing information from the other team as both teams continually updated their strategies. The advantage of a quantum circuit is that it can represent a much wider range of states than a comparable classical system.
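To make the idea of a learned seed distribution concrete, here is a minimal classical sketch, not the researchers' method. It compares a uniform seed distribution over all 8-bit strings with a non-uniform one obtained from a vector of complex amplitudes, the way measurement probabilities arise from a quantum state. The random amplitudes are a hypothetical stand-in for a trained circuit, and all function names are invented for illustration.

```python
import numpy as np

N_QUBITS = 8  # the device in the article uses eight qubits

def uniform_prior(n_bits):
    """Equal probability for every n-bit string (the conventional seed)."""
    size = 2 ** n_bits
    return np.full(size, 1.0 / size)

def born_probabilities(amplitudes):
    """Convert complex amplitudes into measurement probabilities."""
    probs = np.abs(amplitudes) ** 2
    return probs / probs.sum()

def sample_seeds(probs, n_samples, rng):
    """Draw bitstrings from `probs`; each row is one seed for a generator."""
    n_bits = (len(probs) - 1).bit_length()
    indices = rng.choice(len(probs), size=n_samples, p=probs)
    # Unpack each integer index into its bits, most significant bit first.
    shifts = np.arange(n_bits - 1, -1, -1)
    return (indices[:, None] >> shifts) & 1

rng = np.random.default_rng(0)

# Assumption: random amplitudes stand in for the state a trained circuit
# would prepare; a real device would be measured, not simulated.
amplitudes = rng.normal(size=2 ** N_QUBITS) + 1j * rng.normal(size=2 ** N_QUBITS)
learned = born_probabilities(amplitudes)

flat = uniform_prior(N_QUBITS)
seeds = sample_seeds(learned, n_samples=5, rng=rng)
print(seeds)
```

Eight qubits already span 2^8 = 256 possible bitstrings, and the number doubles with each added qubit. In the scheme the article describes, the distribution over seeds would be adjusted during training rather than fixed in advance as it is here.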

Their small quantum-computing device is based on a set of eight qubits stored in trapped ytterbium ions. With this platform, the researchers first trained the full machine-learning algorithm on a standard data set of handwritten digits widely used in the industry. The data include 60,000 images of handwritten single digits. Once the network was trained, the team tested its ability to generate new examples of handwritten digits.

The generated digits have significantly better resolution than those previously produced using other quantum machine-learning devices. As the researchers emphasize, this performance is not obviously better than what can be achieved with the best classical machine-learning system. But the work demonstrates that a quantum-enhanced version of a specific algorithm can perform better than the conventional version and can also be run on today’s quantum devices.

“The success demonstrated in this example should open up the design space for machine learning employing noisy quantum devices,” says quantum physicist Norbert Linke of the University of Maryland in College Park. In the future, he suggests, the best machine-learning systems may well involve both classical and quantum aspects. Similarly, “the best chess player in the world is neither a human, nor a computer, but a human-assisted machine,” he says.

–Mark Buchanan

Mark Buchanan is a freelance science writer who splits his time between Abergavenny, UK, and Notre Dame de Courson, France.

References

1. M. S. Rudolph et al., “Generation of high-resolution handwritten digits with an ion-trap quantum computer,” Phys. Rev. X 12, 031010 (2022).