In the Brain, Function Follows Form

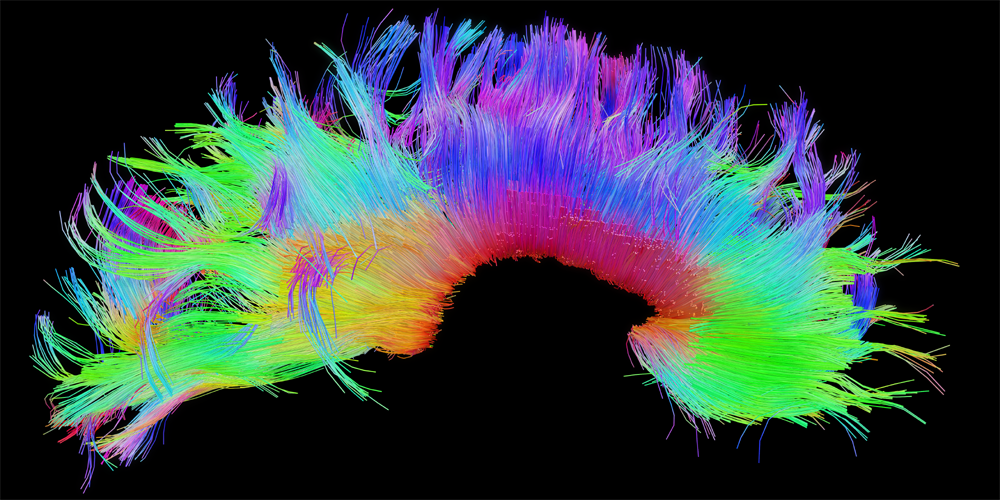

Developing a physics for the brain is a daunting task that has obsessed scientists since large-scale brain-activity measurements became available half a century ago. Advances in such techniques are spurring swift progress in the field. Magnetic resonance imaging (MRI), in particular, offers two extremely valuable types of data. First, a variant of MRI called diffusion tensor imaging (DTI) provides a way to construct a map of the major connections of the brain—the brain’s physical “wiring” (Fig. 1). Second, functional MRI (fMRI) can measure where in the brain activity has just taken place by observing what is termed the blood-oxygen-level-dependent (BOLD) signal. Leon Weninger at the University of Pennsylvania and his colleagues have now combined these methods with tools from network control theory to describe brain dynamics in terms of the information content of specific patterns of brain activity or “brain states,” and of the energy cost of transitions between such states [1]. The paper offers compelling new strategies, derived from physics, to interpret brain structure and function.

The structure and activity measurements afforded by DTI and BOLD fMRI allow scientists to analyze two basic properties of the brain. First of all, to function properly, the brain presumably has to observe itself: Parts of the brain have to be able to estimate the state of other parts of the brain in order to reconstruct what is going on both within and outside the brain. In control-engineering terms, parts of the brain must be observable. The brain also needs to control portions of itself in order to read this article, generate speech and motor functions, and try hard to retrieve memories [2]. Rudolf Kalman first defined the concepts of observability and controllability of linear systems in 1960 [3]. In 1974, Ching-Tai Lin expanded Kalman’s theory to account for which network topologies are structurally controllable, asking how the absence of connections in portions of a network would render it uncontrollable in Kalman’s sense [4]. But brains are floridly nonlinear, and establishing observability and controllability for complex nonlinear networks is much more difficult than it is for linear systems [5]. Analyzing nonlinear observability and controllability requires the use of more complex mathematics such as Lie derivatives and brackets, as well as the introduction of group-theoretic concepts, since symmetries can destroy observability and controllability in fascinating ways [6].
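For readers who want to see the linear case concretely, here is a minimal sketch of Kalman's rank test. It assumes a discrete-time linear model x(t+1) = A x(t) + B u(t); the three-node networks, the variable names, and the helper functions are hypothetical illustrations and do not come from any of the cited works. The sketch shows a chain that is controllable from its first node, the same chain made uncontrollable by deleting one link (the kind of question structural controllability asks), and a symmetric star whose two identical leaves cannot be steered independently from the hub.

```python
import numpy as np

def controllability_matrix(A, B):
    """Build Kalman's matrix [B, AB, A^2 B, ..., A^(n-1) B]."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return np.hstack(blocks)

def is_controllable(A, B):
    """Kalman rank condition: controllable iff the matrix has rank n."""
    n = A.shape[0]
    return bool(np.linalg.matrix_rank(controllability_matrix(A, B)) == n)

# Hypothetical directed chain: node 0 -> node 1 -> node 2.
# A[i, j] = 1 means node i receives input from node j.
A_chain = np.array([[0.0, 0.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0]])
B_head = np.array([[1.0], [0.0], [0.0]])  # control input enters at node 0

# Same chain with the link from node 1 to node 2 removed.
A_broken = A_chain.copy()
A_broken[2, 1] = 0.0

# Hypothetical symmetric star: hub 0 connected to identical leaves 1 and 2.
A_star = np.array([[0.0, 1.0, 1.0],
                   [1.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0]])

chain_ok = is_controllable(A_chain, B_head)    # input reaches every node
broken_ok = is_controllable(A_broken, B_head)  # node 2 is cut off
star_ok = is_controllable(A_star, B_head)      # leaves always move together
```

In this toy setting the star fails the test because swapping the two leaves leaves the network unchanged, so a single input at the hub can never drive them apart. This is only a linear cartoon of the symmetry argument; the nonlinear analysis requires the heavier machinery mentioned above.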

Weninger and his colleagues investigate brain-activity-state controllability by asking a set of fundamental questions relating connectivity-constrained state transitions to other brain states. They characterize a given state through a measure of information (how probable the state is to be observed within the ensemble of brain regions), and they use a fundamental result derived from Kalman’s work to establish whether a network’s connectivity renders it controllable. Specifically, they employ a controllability Gramian matrix that captures the relationship between network topology and control input. Such controllability provides critical constraints on the brain’s state transitions.
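To make the Gramian less abstract, the following sketch computes a finite-horizon controllability Gramian for a toy network. It assumes a discrete-time linear model x(t+1) = A x(t) + B u(t), which may differ from the model used in the paper; the three-node path graph, the normalization of A, the horizon, and the function names are all hypothetical choices. The trace of the Gramian is used here as "average controllability," a convention common in the network-control literature and assumed rather than taken from the article.

```python
import numpy as np

def finite_horizon_gramian(A, B, horizon):
    """Sum of A^k B B^T (A^T)^k for k = 0 .. horizon - 1."""
    n = A.shape[0]
    W = np.zeros((n, n))
    Ak_B = B.copy()              # holds A^k B, starting at k = 0
    for _ in range(horizon):
        W += Ak_B @ Ak_B.T
        Ak_B = A @ Ak_B
    return W

def average_controllability(W):
    """Trace of the Gramian (an assumed, commonly used summary)."""
    return float(np.trace(W))

# Hypothetical undirected path graph: 0 - 1 - 2.
adjacency = np.array([[0.0, 1.0, 0.0],
                      [1.0, 0.0, 1.0],
                      [0.0, 1.0, 0.0]])
# Scale so that all eigenvalues lie inside the unit circle (stable dynamics).
A = adjacency / (1.0 + np.abs(np.linalg.eigvalsh(adjacency)).max())

B_end = np.array([[1.0], [0.0], [0.0]])  # input at an end node
B_mid = np.array([[0.0], [1.0], [0.0]])  # input at the middle node

W_end = finite_horizon_gramian(A, B_end, horizon=50)
W_mid = finite_horizon_gramian(A, B_mid, horizon=50)

# A strictly positive smallest eigenvalue means every direction in state
# space can be reached; a zero eigenvalue marks an unreachable direction.
min_eig_end = np.linalg.eigvalsh(W_end).min()
min_eig_mid = np.linalg.eigvalsh(W_mid).min()
```

In this example the Gramian for the end-node input is positive definite, whereas driving the middle node leaves one direction unreachable because the two end nodes respond identically. The same wiring therefore yields different constraints depending on where the control input enters.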

The scope and audaciousness of this project are mind-boggling. The team applies their approach to a massive dataset from the Human Connectome Project [7]. They subdivide the brain regions from fMRI scans into activity parcels, and they quantify the Shannon information in the ensemble of parcels from the logarithm of the inverse of the probability of achieving a given state during rest versus during the performance of a set of cognitive tasks. States with high information content are those that are statistically rare during rest. The researchers then calculate the energy required to transition from one state to another. They report several main findings: (1) the information content depends upon cognitive context (compared to motor tasks, social tasks are very challenging!); (2) the energy required to transition to high-information (rare) states is greater than that required to transition between low-information (common) states; and (3) the state transitions show that brain wiring is optimized to render this dynamical system efficient. The average controllability was found to correlate with the ease of transitioning to high-information states.
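The two quantities discussed above, the information content of a state and the energy of a transition, can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's pipeline: the state counts are invented, the network is a hypothetical three-node path graph, the dynamics are assumed to be discrete-time and linear, and the energy is the textbook minimum-energy result for such systems, d^T W^{-1} d, where d is the gap between the target and the uncontrolled evolution and W is the finite-horizon Gramian.

```python
import numpy as np

def shannon_information(prob):
    """Information in bits of an outcome with probability prob."""
    return -np.log2(prob)

def gramian(A, B, horizon):
    """Finite-horizon Gramian: sum of A^k B B^T (A^T)^k, k < horizon."""
    W = np.zeros((A.shape[0], A.shape[0]))
    Ak_B = B.astype(float)
    for _ in range(horizon):
        W += Ak_B @ Ak_B.T
        Ak_B = A @ Ak_B
    return W

def minimum_energy(A, B, x_start, x_target, horizon):
    """Smallest sum of squared inputs that moves x_start to x_target."""
    W = gramian(A, B, horizon)
    free_evolution = np.linalg.matrix_power(A, horizon) @ x_start
    gap = x_target - free_evolution
    return float(gap @ np.linalg.solve(W, gap))

# Invented counts of how often two states appear during rest.
counts = {"common": 90, "rare": 10}
total = sum(counts.values())
info_bits = {name: shannon_information(c / total) for name, c in counts.items()}

# Hypothetical path graph 0 - 1 - 2, scaled to give stable dynamics.
adjacency = np.array([[0.0, 1.0, 0.0],
                      [1.0, 0.0, 1.0],
                      [0.0, 1.0, 0.0]])
A = adjacency / (1.0 + np.abs(np.linalg.eigvalsh(adjacency)).max())

x_rest = np.zeros(3)
x_goal = np.array([0.0, 0.0, 1.0])   # activate only node 2

# Energy when every node receives input versus input at node 0 alone.
energy_all = minimum_energy(A, np.eye(3), x_rest, x_goal, horizon=10)
energy_single = minimum_energy(A, np.array([[1.0], [0.0], [0.0]]),
                               x_rest, x_goal, horizon=10)
```

Here the rarer state carries more bits, and reaching the goal through a single distant input node costs more energy than driving all nodes directly. Whether rare states are also the expensive ones in real brains is the empirical question the study addresses; this toy code does not test it.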

Many questions that go beyond the scope of the study are worth bringing up for those who wish to think more about this characterization of thought processes. For example, do the high-energy transitions to high-information states reflect cognitive effort? Surely, while writing this article, my brain is engaged in tasks more complex than what we would expect from a statistical ensemble of elements in a Boltzmann-like distribution of energy states. But could we use such an information-control theoretic description of brain activity and mental effort to shed new light on cognitive dysfunction and mental health? An information-based dynamic biomarker for cognitive disorders would be very useful if it were to emerge from such a framework.

One should bear in mind some of the study’s limitations, however. One such limitation relates to the detailed mechanics behind the brain’s energy balance. The brain is an open system that consumes about 20% of the body’s resting metabolic energy. Most of that energy is dedicated to reestablishing ion gradients across nerve cells and repackaging neurotransmitters after activity [8]. During activity, that stored energy is dissipated almost instantly (in milliseconds); the brain then recharges such energy stores more slowly (in seconds). The BOLD fMRI signal reflects this slower replenishment, not the rapid dissipation that occurs during brain activity. It is not known whether the energy required to reach a high-information state is stored independently of the improbability of reaching that state through stochastic or resting activity.

Another limitation arises from the maximum spatial resolution that is currently possible in MRI: both the BOLD signal and DTI pathways measure local regions of interest much larger than individual neurons. There is, therefore, a tremendous amount of subgrid physics going on in the brain that is not captured by these measures. Furthermore, much of the brain’s connectivity is very much one-way for a given nerve fiber or bundle—there is no reversibility or detailed balance in these ensemble dynamics. And a key hallmark of cognitive function is synchronization [9], which is typically measured electrically; yet synchrony implies symmetries in networks, and such symmetry can destroy controllability [10].

The work by Weninger and colleagues offers compelling new strategies to investigate brain dynamics and cognitive states and will surely lead to other fascinating questions in our minds about this most complex of organs.

References

- L. Weninger et al., “Information content of brain states is explained by structural constraints on state energetics,” Phys. Rev. E 106, 014401 (2022).

- S. J. Schiff, Neural control engineering: The emerging intersection between control theory and neuroscience (MIT Press, Cambridge, 2012).

- R. E. Kalman, “A new approach to linear filtering and prediction problems,” J. Basic Eng. 82, 35 (1960).

- C. T. Lin, “Structural controllability,” IEEE Trans. Automat. Contr. 19, 201 (1974).

- Y. Y. Liu et al., “Controllability of complex networks,” Nature 473, 167 (2011).

- A. J. Whalen et al., “Observability and controllability of nonlinear networks: The role of symmetry,” Phys. Rev. X 5, 011005 (2015).

- D. C. Van Essen et al., “The WU-Minn Human Connectome Project: An overview,” NeuroImage 80, 62 (2013).

- P. Lennie, “The cost of cortical computation,” Current Biology 13, 493 (2003).

- P. J. Uhlhaas et al., “Neural synchrony in cortical networks: History, concept, and current status,” Front. Integr. Neurosci. 3 (2009).

- L. M. Pecora et al., “Cluster synchronization and isolated desynchronization in complex networks with symmetries,” Nature Commun. 5 (2014).