Information as Thermodynamic Fuel

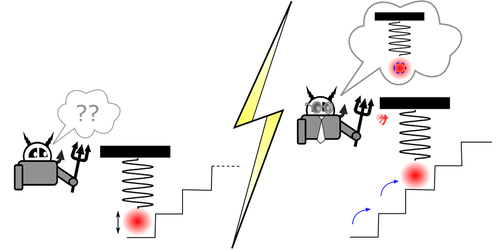

An information engine uses information to convert heat into useful energy. Such an engine can be made, for example, from a heavy bead held in an optical trap. A bead engine runs on thermal noise: when random thermal fluctuations push the bead upward, a feedback algorithm lifts the trap to follow it. Repeating this step raises the average height of the bead, so the engine stores gravitational potential energy. No work is done to lift the trap; instead, the potential energy is harvested from the surrounding heat, with information about the bead’s position serving as the fuel.

However, measurement noise, which is intrinsic to the system that probes the bead’s position, can degrade the engine’s efficiency. It adds uncertainty to each measurement, which can lead the algorithm that operates the engine to make incorrect feedback decisions. Now Tushar Saha and colleagues at Simon Fraser University in Canada have developed an algorithm that is far less vulnerable to these errors, allowing an information engine to run efficiently even when measurement noise is high [1].
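The noise-free ratchet described above can be sketched as a short simulation. This is a toy model under stated assumptions, not the authors’ code: the bead is an overdamped particle in a harmonic trap with gravity, positions are measured perfectly, and whenever the bead is found above the trap center the trap is reflected about the bead. That reflection is a hypothetical zero-work rule, chosen here because it leaves the trap’s stored energy unchanged. The names `ratchet_engine` and `delta` are illustrative.

```python
import math
import random

def ratchet_engine(steps=100_000, dt=0.1, delta=0.5, seed=1):
    """Toy zero-work ratchet with perfect (noise-free) position measurements.

    Assumed dimensionless units: lengths in thermal widths sqrt(kT/kappa),
    times in trap relaxation times, energies in kT. `delta` is the bead's
    gravitational sag m*g/kappa below the trap center, in those length units.
    Returns (final bead height, gravitational energy gained in kT, trap work in kT).
    """
    rng = random.Random(seed)
    a = math.exp(-dt)                 # relaxation factor over one time step
    s = math.sqrt(1.0 - a * a)        # thermal kick size (stationary variance 1)
    lam = 0.0                         # trap-center height
    u = -delta                        # bead height relative to the trap center
    start = lam + u                   # initial bead height
    trap_work = 0.0
    for _ in range(steps):
        # exact one-step update for an overdamped bead in a harmonic trap plus gravity
        u = -delta + (u + delta) * a + s * rng.gauss(0.0, 1.0)
        if u > 0.0:                   # a fluctuation lifted the bead above the trap center
            # hypothetical rule: reflect the trap center about the bead,
            # lam -> lam + 2u and u -> -u; the trap energy u**2/2 is unchanged,
            # so this move does no work on the bead
            trap_work += 0.5 * ((-u) ** 2 - u ** 2)
            lam += 2.0 * u
            u = -u
    height = lam + u
    # gravitational energy gained, in kT, is delta times the rise in height
    return height, delta * (height - start), trap_work
```

Calling `ratchet_engine()` should return a positive final height and a positive gravitational energy gain while the accumulated trap work stays at zero: in this toy model every joule stored against gravity comes from thermal kicks that the feedback locks in.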

To date, most information engines have used feedback algorithms that consider only the most recent measurement of the bead’s position. In such engines, once the signal-to-noise ratio of the measurement falls below a critical value, noisy readings trigger so many wrong trap moves that the engine stops working.
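Why latest-measurement feedback fails at low signal-to-noise ratio can be seen with a toy calculation. The sketch below is a hypothetical model rather than the authors’ setup: it draws the bead’s true displacement from the trap center from a unit Gaussian, adds Gaussian measurement noise of standard deviation `noise_std` (so the assumed signal-to-noise ratio is `1/noise_std**2`), and counts how often the noisy reading places the bead on the wrong side of the trap center. For two such Gaussians the mismatch probability has the closed form arctan(`noise_std`)/π, which climbs toward 1/2, a coin flip, as noise swamps the signal. The function names are illustrative.

```python
import math
import random

def wrong_decision_rate(noise_std, trials=100_000, seed=0):
    """Fraction of feedback decisions in which the sign of the latest noisy
    measurement disagrees with the sign of the true bead displacement.

    Assumed model: true displacement x ~ N(0, 1) (thermal width = 1),
    measurement y = x + N(0, noise_std**2).
    """
    rng = random.Random(seed)
    wrong = 0
    for _ in range(trials):
        x = rng.gauss(0.0, 1.0)               # true displacement from trap center
        y = x + rng.gauss(0.0, noise_std)     # what the detector reports
        if (x > 0.0) != (y > 0.0):            # feedback acts on the wrong side
            wrong += 1
    return wrong / trials

def predicted_rate(noise_std):
    # sign-mismatch probability for two correlated zero-mean Gaussians:
    # arccos(1/sqrt(1 + s**2)) / pi, which simplifies to arctan(s) / pi
    return math.atan(noise_std) / math.pi
```

For example, `wrong_decision_rate(1.0)` should land close to `predicted_rate(1.0)`, which equals 1/4; with noise ten times the thermal width, nearly half of all decisions are wrong.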

To overcome this problem, Saha and colleagues instead use a “filtering” algorithm that replaces the most recent bead measurement with a Bayesian estimate of the bead’s position. This estimate accounts for both measurement noise and delays in the device’s feedback.
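For the simplest case, a Bayesian position estimate of this kind can be sketched as a Kalman filter. The sketch assumes a linear model with Gaussian noise (a bead relaxing in a fixed harmonic trap, observed through Gaussian measurement noise), a simplification made here rather than the authors’ full setup, and it compares the filtered estimate with the raw latest measurement. The function name `filter_vs_raw` and its parameters are hypothetical.

```python
import math
import random

def filter_vs_raw(noise_std=2.0, steps=50_000, dt=0.1, seed=2):
    """Compare the latest raw measurement with a Kalman-filter (Bayesian)
    estimate of a bead's displacement from a fixed trap center.

    Assumed model (dimensionless): z[k+1] = a*z[k] + w, w ~ N(0, 1 - a**2),
    so z has stationary variance 1; y[k] = z[k] + v, v ~ N(0, noise_std**2).
    Returns the mean-squared errors of (raw measurement, filtered estimate).
    """
    rng = random.Random(seed)
    a = math.exp(-dt)
    q = 1.0 - a * a          # process-noise variance per step
    r = noise_std ** 2       # measurement-noise variance
    z = rng.gauss(0.0, 1.0)  # true displacement, drawn from the stationary state
    m, p = 0.0, 1.0          # prior mean and variance of the estimate
    se_raw = se_filt = 0.0
    for _ in range(steps):
        z = a * z + math.sqrt(q) * rng.gauss(0.0, 1.0)   # bead moves
        y = z + rng.gauss(0.0, noise_std)                # noisy measurement
        # predict: propagate the previous estimate through the bead dynamics
        m, p = a * m, a * a * p + q
        # update: blend prediction and measurement, weighted by their uncertainties
        k = p / (p + r)
        m, p = m + k * (y - m), (1.0 - k) * p
        se_raw += (y - z) ** 2
        se_filt += (m - z) ** 2
    return se_raw / steps, se_filt / steps
```

With `noise_std=2.0` (measurement noise twice the bead’s thermal spread), the raw reading’s mean-squared error should come out close to 4, while the filtered estimate’s error falls below the bead’s own thermal variance of 1. A feedback delay of `d` steps could be handled in this model by applying the predict step `d` more times before acting, which multiplies the estimate by `a**d`; the sketch leaves the delay out.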

The team shows that their algorithm can run an information engine even when the signal-to-noise ratio is low. However, because the Bayesian estimate is calculated from all past measurements, the algorithm requires more storage capacity than algorithms that use only the latest measurement. Thus, as in many scientific problems involving measurements, a trade-off emerges, in this case between memory cost and energy extraction.

–Agnese Curatolo

Agnese Curatolo is an Associate Editor at Physical Review Letters.

References

- T. K. Saha et al., “Bayesian information engine that optimally exploits noisy measurements,” Phys. Rev. Lett. 129, 130601 (2022).