Cracking the Challenge of Quantum Error Correction

Errors are the bête noire of quantum computing. They can come from material defects, thermal fluctuations, cosmic rays, or other sources, and they only become more meddlesome the larger a quantum processor is. But the demonstration of an unprecedented ability to correct quantum errors may signal the end of this trend. A team of researchers at Google Quantum AI in California has used their latest quantum processor, dubbed Willow, to demonstrate a “below-threshold” error-correction method, one that actually performs better as the number of quantum bits, or qubits, increases [1]. The team also showed that this new quantum chip could solve in 5 minutes a benchmark test that would take 10 septillion (10²⁵) years on today’s most powerful supercomputers.

“I find it astonishing that such an exquisite level of control is actually now possible, and that quantum error correction really seems to behave as we predicted,” says quantum information researcher Lorenza Viola of Dartmouth College, New Hampshire. The demonstration that error correction increases the length of time over which a qubit can store information “is a notable milestone,” says theoretical physicist John Preskill of Caltech. (Neither Viola nor Preskill was involved in the work.)

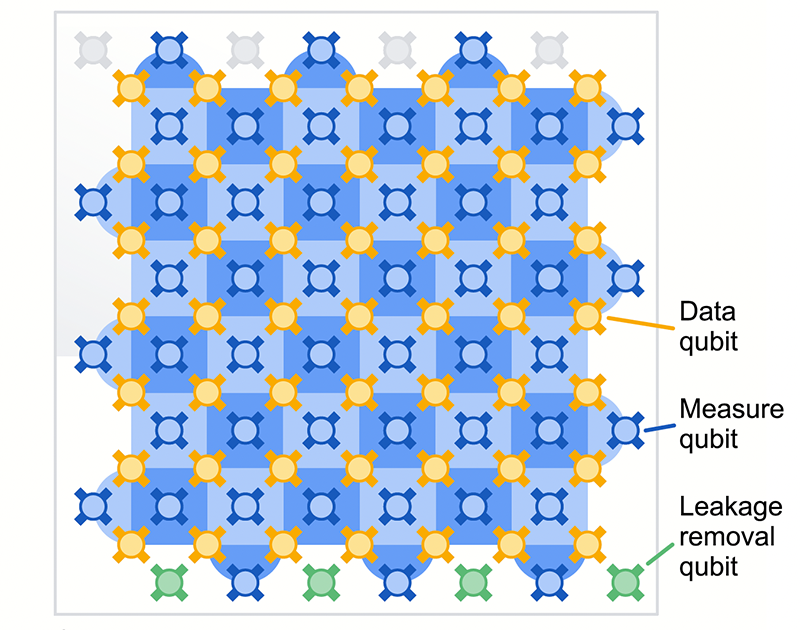

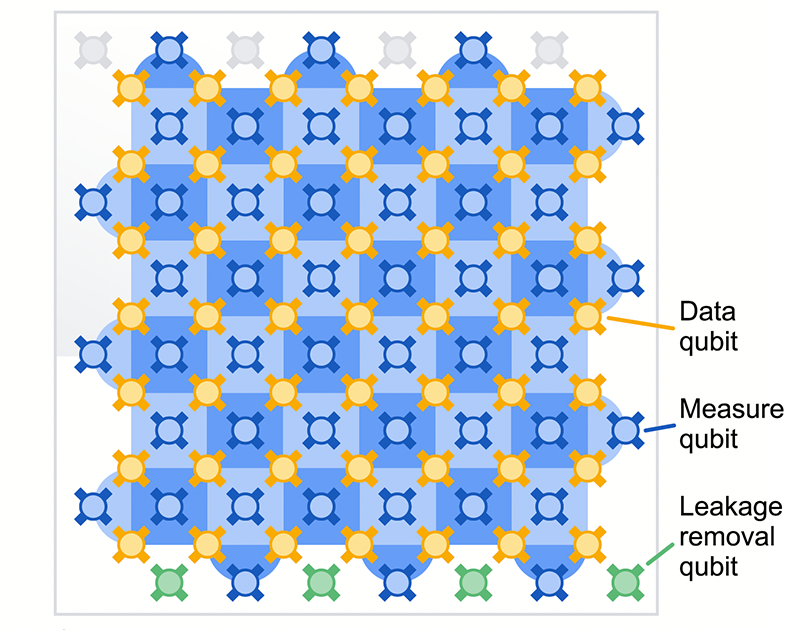

The basic idea of error correction is to have many “physical” qubits work together to encode a single “logical” qubit. Much like error correction in classical devices, its quantum counterpart exploits redundancy: One logical qubit of information isn’t stored in a single physical qubit but is spread across an entangled state of many physical qubits. The challenge is identifying errors in the fragile quantum states without introducing additional errors. Researchers have developed sophisticated methods, called surface codes, that can correct errors in a 2D planar arrangement of qubits (see Viewpoint: Error-Correcting Surface Codes Get Experimental Vetting).

The surface-code approach requires significant hardware overhead—additional physical qubits and quantum gates that perform error-correcting operations—which in turn introduces more opportunities for errors. Since the 1990s, researchers have predicted that error correction can only provide a net improvement if the error rate of the physical qubits is below a certain threshold. “There is no point in doing quantum error correction if you aren’t below threshold,” says Julian Kelly, Google’s Director of Quantum Hardware.
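A classical analogy can make the threshold idea concrete. The sketch below is not the surface code: it assumes a simple repetition code in which one bit is copied onto n bits, each copy flips independently with probability p, and the decoder takes a majority vote. The function name `majority_vote_failure` and the sample values of p are illustrative choices, not taken from the Google experiment.

```python
from math import comb


def majority_vote_failure(p: float, n: int) -> float:
    """Probability that a majority vote over n copies decodes wrongly.

    Assumes each copy flips independently with probability p and that
    n is odd, so the vote can never tie.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd")
    # The vote fails when more than half of the copies have flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    # One error rate below the toy model's break-even point, one above it.
    for p in (0.1, 0.6):
        rates = [majority_vote_failure(p, n) for n in (1, 3, 5, 7)]
        print(f"p = {p}: " + ", ".join(f"{r:.4f}" for r in rates))
```

In this toy model the break-even point is p = 0.5: below it, adding copies lowers the failure probability, and above it, adding copies makes things worse. Quantum codes show the same qualitative behavior, though their thresholds depend on the code and the noise model.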

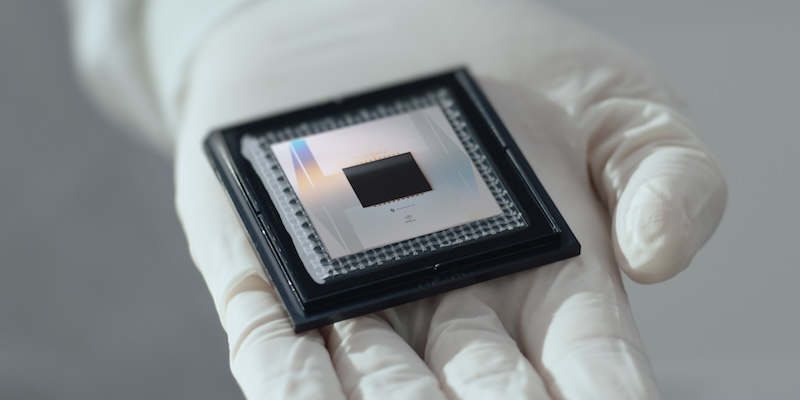

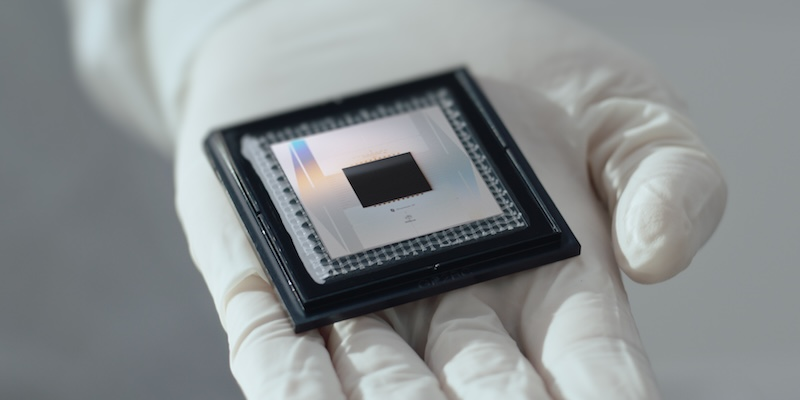

Previous error-correction efforts typically made the error rate worse as more qubits were added, but the Google Quantum AI researchers have reversed this direction. “We are finally below the threshold,” says Michael Newman, a research scientist at Google Quantum AI. The milestone was achieved in an experiment where the Willow chip, a 2D array of 105 superconducting qubits, was used to store a single qubit of information in a square grid of physical “data” qubits. To verify that the error rate scaled as desired, the researchers varied the size of this grid from 3 × 3 to 5 × 5 to 7 × 7, corresponding to 9, 25, and 49 data qubits, respectively (along with other qubits that perform error-correcting operations).
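The qubit counts above follow from the grid size. As a rough sketch, the function below tallies the qubits in a d × d grid, assuming the standard rotated surface-code layout in which d² data qubits are accompanied by d² − 1 measurement qubits. That layout is an assumption made here for illustration; the article itself gives only the data-qubit counts, and the experiment may use additional qubits for other purposes.

```python
def surface_code_qubits(d: int) -> dict:
    """Qubit tally for a d x d grid (hypothetical helper).

    Assumes the rotated surface-code layout: d*d data qubits plus
    d*d - 1 measurement qubits. This layout is an assumption, not
    something stated in the article.
    """
    if d < 3 or d % 2 == 0:
        raise ValueError("d must be an odd integer >= 3")
    data = d * d
    measure = d * d - 1
    return {"grid": d, "data": data, "measure": measure, "total": data + measure}


if __name__ == "__main__":
    for d in (3, 5, 7):
        print(surface_code_qubits(d))
```

Under this assumption a 7 × 7 grid needs 97 qubits, which fits within Willow’s 105.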

For each step up in grid size, they found that the error rate went down by a factor of 2 (an exponential decrease), reaching a rate of 1.4 × 10⁻³ errors per cycle of error correction for the 7 × 7 grid. For comparison, a single physical qubit experiences roughly 3 × 10⁻³ errors over a comparable time period, which means that the 49-qubit combination gives fewer errors than just one physical qubit. “This shows the ability of error correction to really be more than the sum of its parts,” Newman says. What’s more, the observed exponential suppression implies that increasing the grid size further should give lower and lower error rates.
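The scaling described above can be written as a one-line formula: each step up in grid size (from d to d + 2) divides the error rate by a fixed suppression factor. The sketch below uses the round figures quoted in this article (a factor of 2 and 1.4 × 10⁻³ errors per cycle for the 7 × 7 grid) and assumes, purely for illustration, that the same factor keeps holding for larger grids. The function name and its parameters are hypothetical.

```python
def extrapolated_logical_error(
    d: int,
    eps_ref: float = 1.4e-3,   # errors per cycle quoted for the 7 x 7 grid
    d_ref: int = 7,            # grid size at which eps_ref applies
    suppression: float = 2.0,  # factor gained per step up in grid size
) -> float:
    """Naive extrapolation of the logical error rate to a d x d grid.

    Assumes a constant suppression factor for every step d -> d + 2,
    which is an idealization and not a measured result.
    """
    if d < 3 or d % 2 == 0:
        raise ValueError("d must be an odd integer >= 3")
    steps = (d - d_ref) // 2  # number of grid-size steps away from d_ref
    return eps_ref / suppression**steps


if __name__ == "__main__":
    for d in (7, 9, 11, 13):
        print(f"{d} x {d}: {extrapolated_logical_error(d):.2e} errors per cycle")
```

With the defaults, the 9 × 9 and 11 × 11 grids would come out at roughly 7 × 10⁻⁴ and 3.5 × 10⁻⁴ errors per cycle. These are naive extrapolations, not measured values.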

The key ingredient to the result was an improvement in the performance of the physical qubits. Compared to the qubits in Google’s previous quantum processor, Sycamore, Willow’s qubits feature an up to fivefold increase in qubit coherence time and a twofold reduction in error rate. Kevin Satzinger, also a research scientist at Google Quantum AI, says that the boost in physical qubit quality can be attributed to a new, dedicated fabrication facility, as well as to improved design of the processor’s architecture through so-called gap engineering.

To assess whether their Willow processor had “beyond-classical” abilities, the team used it to perform a task called random circuit sampling (RCS), which generates samples of the output distribution of a random quantum circuit. While RCS isn’t of any practical use, it is a leading benchmark for evaluating the performance of a quantum computer, as it presents a computational task considered to be intractable for classical supercomputers. In the RCS test, Willow achieved a clear quantum advantage by quickly performing a computation that a classical supercomputer would not be able to complete even on timescales vastly exceeding the age of the Universe.

Google has outlined a road map toward a large-scale, error-corrected quantum computer, which involves scaling their processor up to one million physical qubits and lowering logical error rates to less than 10⁻¹² errors per cycle. Such a computer could tackle a variety of classically unsolvable problems in drug design, fusion energy, quantum-assisted machine learning, and other fields, the researchers say. To make this scale-up possible, an important research direction is further improving the underlying physical qubits, says Satzinger. “Thanks to the demonstrated leverage of quantum error correction, even a modest improvement in the physical qubits can make orders-of-magnitude difference.”
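One way to get a feel for this leverage is to ask how large a grid would be needed to reach the 10⁻¹² target for different suppression factors. The sketch below starts from the article’s figure of 1.4 × 10⁻³ errors per cycle for a 7 × 7 grid and assumes a constant suppression factor per step up in grid size. Holding the starting rate fixed while varying the factor is a simplification, the factor of 4 is a hypothetical value, and the function name is illustrative.

```python
def grid_size_for_target(
    target: float,
    suppression: float,
    eps_start: float = 1.4e-3,  # errors per cycle quoted for the 7 x 7 grid
    d_start: int = 7,
) -> int:
    """Smallest odd grid size d whose extrapolated error rate is <= target.

    Assumes the error rate is divided by `suppression` for every step
    d -> d + 2, an idealization rather than a measured result.
    """
    if suppression <= 1.0:
        raise ValueError("suppression must exceed 1 for the rate to fall")
    rate, d = eps_start, d_start
    while rate > target:
        rate /= suppression  # one step up in grid size
        d += 2
    return d


if __name__ == "__main__":
    for factor in (2.0, 4.0):
        d = grid_size_for_target(1e-12, factor)
        print(f"suppression factor {factor}: {d} x {d} grid")
```

With these assumptions a suppression factor of 2 requires a 69 × 69 grid to reach the target, whereas a factor of 4 requires 39 × 39.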

Viola says that the result solidifies the hope that fault-tolerant computations may be within reach, but further progress will require scrutinizing the possible physical mechanisms leading to logical errors. “As the error rates are pushed to increasingly smaller values, new or previously unaccounted for noise effects and error-propagation mechanisms may become relevant,” she says. “We still have a long way to go before quantum computers can run a wide variety of useful applications, but this demonstration is a significant step in that direction,” says Preskill.

–Matteo Rini

Matteo Rini is the Editor of Physics Magazine.

–Michael Schirber

Michael Schirber is a Corresponding Editor for Physics Magazine based in Lyon, France.

References

- Google Quantum AI and Collaborators, “Quantum error correction below the surface code threshold,” Nature.