Improving Electronic Structure Calculations

Every Friday, Physical Review Letters sends me an email with the contents of its latest issue. This past year, I’ve read of the first detection of gravitational waves and a candidate particle whose existence would imply a fifth force of nature. With such obviously great physics going on, should we care about a report in PRL that theoretical chemists have found a new way to accurately “predict” the energy needed to break a molecule into its constituent atoms [1]? The answer is yes, because this seemingly modest development, from Jannis Erhard, Patrick Bleiziffer, and Andreas Görling at the University of Erlangen-Nuremberg, Germany, could open the door to predicting a multitude of material properties, more accurately and reliably than is currently possible. This would help scientists find better materials for batteries, solar cells, and many other applications.

The new work builds on a computational tool called density-functional theory (DFT), one of the great success stories of physics. Founded by Walter Kohn and co-workers in 1965 [2], DFT is the workhorse for calculating the electronic structure of all matter under everyday conditions. The properties of a molecule or solid, such as its bond lengths, binding energy, phonon spectrum, or lattice structure, are determined by its electronic structure. There are different ways to determine this structure for a given system. The most accurate is to determine the many-electron wave function by solving the Schrödinger equation. The advantage of DFT is that it can perform calculations relatively quickly and with high (but not perfect) accuracy. It can therefore be used to find the properties of large molecules (of about 500 atoms) and many crystalline solids (with about 100 atoms per unit cell), all on a modern laptop.

For the past two decades, DFT has been a standard tool for routine calculations in chemistry, and it is currently revolutionizing materials science. In 2015 alone, more than 30,000 scientific papers were published that used DFT [3], and valid predictions are becoming commonplace. For example, based on DFT predictions [4], researchers discovered in 2015 that hydrogen sulfide under pressure has a record high superconducting transition temperature (203 K) [5].

However, DFT calculations obey the GIGO principle: Garbage in, garbage out. Every practical calculation uses an approximation for a small component of the total electronic energy, called the exchange-correlation energy, which arises from the electrons’ interactions with each other. The properties predicted by DFT will only ever be as good as this approximation. At least two-thirds of calculations use one of a few standard approximations whose predictive successes and failures are well documented.

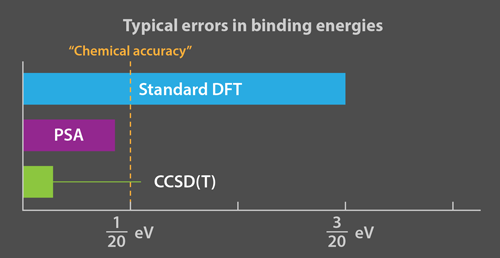

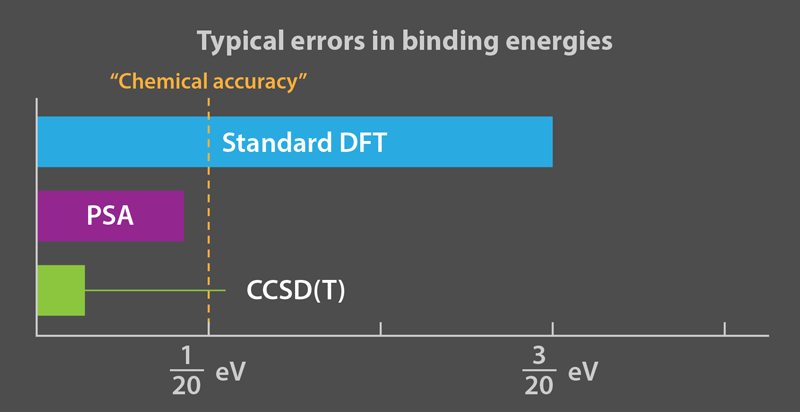

Although the imperfect accuracy of DFT is sufficient for much of materials science, chemistry, and solid-state physics, it fails when chemical reactions depend sensitively on molecular binding energies (typically, a few eV). The term “chemical accuracy” implies computational errors that are less than 0.05 eV; the errors from standard DFT are typically larger. To achieve high accuracy, the field of quantum chemistry is devoted to systematic approaches to directly solving the Schrödinger equation. Today’s most popular approach, called CCSD(T), usually yields an energy error much less than 0.05 eV. But because the computational cost of CCSD(T) and other quantum chemistry methods scales so poorly with the number of electrons, they are limited mostly to small molecules. A recent paper announced the first such calculation of the energy of a benzene crystal to within 0.01 eV [6].
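To put these thresholds in the molar units chemists usually quote, here is a minimal Python sketch of the conversion. The function and variable names are illustrative only, and the conversion factors are rounded standard values (1 eV per molecule is about 96.485 kJ/mol, and 1 kcal is 4.184 kJ).

```python
# Convert per-molecule energies in eV to molar units.
# Conversion factors are rounded standard values; names are illustrative.
EV_TO_KJ_PER_MOL = 96.485   # 1 eV per molecule expressed in kJ/mol
KJ_PER_KCAL = 4.184         # thermochemical calorie


def ev_to_kj_per_mol(energy_ev: float) -> float:
    """Return the energy in kJ/mol for a per-molecule energy in eV."""
    return energy_ev * EV_TO_KJ_PER_MOL


def ev_to_kcal_per_mol(energy_ev: float) -> float:
    """Return the energy in kcal/mol for a per-molecule energy in eV."""
    return ev_to_kj_per_mol(energy_ev) / KJ_PER_KCAL


# The two error thresholds mentioned in the text.
thresholds_ev = {
    "chemical accuracy": 0.05,
    "benzene crystal calculation": 0.01,
}

for label, energy_ev in thresholds_ev.items():
    print(
        f"{label}: {energy_ev} eV = "
        f"{ev_to_kj_per_mol(energy_ev):.2f} kJ/mol = "
        f"{ev_to_kcal_per_mol(energy_ev):.2f} kcal/mol"
    )
```

On this scale, 0.05 eV is a little over 1 kcal/mol, and 0.01 eV falls just under 1 kJ/mol, which matches the "sub-kilojoule/mole" wording in the title of Ref. [6].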

It was the accuracy of these and similar methods for small molecules that first validated DFT’s accuracy, in limited cases, leading to its widespread adoption in chemistry. So, many researchers working on electronic structure would love to bridge the gap between low-cost but unreliable standard DFT calculations and high-cost quantum chemical calculations, with a method that works for both molecules and materials.

A promising candidate is the random phase approximation (RPA), which constructs the exchange-correlation energy from a simple approximation to the electronic response of the system to an external potential, using the electron orbitals derived from a standard DFT calculation. While RPA calculations can predict many quantities with high accuracy, including van der Waals interactions between and within both molecules and solids, a sticking point has been their underestimate of binding energies [7]. In principle, RPA can be made exact using corrections derived from time-dependent DFT (TDDFT). But practical TDDFT approximations may worsen the energetics, or introduce instabilities in the response of the system to external perturbations.
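For readers who want to see the structure behind this description, the relations below give the standard textbook form of the correlation energy in the adiabatic-connection framework (atomic units). The notation is an assumption of this sketch and is not taken from the article: $\chi_0$ is the response function built from the DFT orbitals, $\chi_\lambda$ is the response at electron-electron coupling strength $\lambda$, $v$ is the Coulomb repulsion, and $f_{xc}^{\lambda}$ is the TDDFT exchange-correlation kernel.

```latex
\begin{align}
E_c &= -\frac{1}{2\pi}\int_0^1 d\lambda \int_0^\infty d\omega\,
      \mathrm{Tr}\left\{ v\left[\chi_\lambda(i\omega) - \chi_0(i\omega)\right]\right\}, \\
\chi_\lambda &= \chi_0 + \chi_0\left(\lambda v + f_{xc}^{\lambda}\right)\chi_\lambda, \\
E_c^{\mathrm{RPA}} &= \frac{1}{2\pi}\int_0^\infty d\omega\,
      \mathrm{Tr}\left\{\ln\left[1 - \chi_0(i\omega)\,v\right] + \chi_0(i\omega)\,v\right\}.
\end{align}
```

The RPA corresponds to setting $f_{xc}^{\lambda} = 0$ in the second line, after which the $\lambda$ integral can be done analytically to give the third line. The TDDFT corrections mentioned above amount to restoring an approximate $f_{xc}^{\lambda}$.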

That’s where the new work by Erhard et al. comes in. They eliminate these instabilities by expanding the TDDFT corrections to RPA in powers of the electron-electron repulsion. Their power series approximation (PSA) involves three parameters [1]. Being pragmatic chemists, instead of deriving these parameters, they simply fit them to a “training set” of binding energies of small molecules, and test whether the fitted parameters predict several properties of other molecules accurately. The PSA approach costs little more computational time than RPA, but is much more stable and accurate (Fig. 1).
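The fit-then-validate workflow can be illustrated generically. The Python sketch below is not the PSA functional and uses no data from the paper: it assumes a toy model that is linear in three hypothetical parameters, generates synthetic "reference energies" from known coefficients, fits on one set, and checks the error on a separate held-out set. All names and numbers are invented for illustration.

```python
import numpy as np


def fit_parameters(features: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Least-squares fit of linear coefficients to reference energies."""
    params, *_ = np.linalg.lstsq(features, reference, rcond=None)
    return params


def mean_abs_error(features: np.ndarray, reference: np.ndarray,
                   params: np.ndarray) -> float:
    """Mean absolute deviation between model predictions and references."""
    return float(np.mean(np.abs(features @ params - reference)))


# Synthetic, noise-free toy data (NOT molecular data from the paper).
rng = np.random.default_rng(0)
true_params = np.array([0.5, -1.2, 2.0])   # hypothetical "exact" parameters
train_x = rng.normal(size=(20, 3))          # toy descriptors, training set
test_x = rng.normal(size=(10, 3))           # toy descriptors, held-out set
train_y = train_x @ true_params             # toy reference energies
test_y = test_x @ true_params

# Fit on the training set only, then evaluate on both sets.
params = fit_parameters(train_x, train_y)
train_mae = mean_abs_error(train_x, train_y, params)
test_mae = mean_abs_error(test_x, test_y, params)

print("fitted parameters:", params)
print("training MAE:", train_mae)
print("held-out MAE:", test_mae)
```

The point of the held-out set is the same as in the text: a small training error alone says little, whereas a small error on systems that were not used in the fit suggests the parameters carry over.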

The team illustrates their method on a nitrogen molecule because its triple bond exhibits a high level of static correlation (loosely, electron correlations that arise from the symmetry of the molecule), which increases as the molecule is stretched. If a computational tool can handle N2 well, it can tackle most main-group chemistry correctly. Erhard et al. have provided sufficient evidence to suggest their method could work for many molecules.

What happens now? Many electronic structure groups already have working RPA codes. Such groups will try the power series approximation from Erhard et al., running it through a battery of tests to check its accuracy. If no major flaw appears, it will become a new standard tool for determining highly accurate electronic structures, at perhaps only one order of magnitude additional computational cost relative to generic DFT, but one order of magnitude less than CCSD(T). If it works for semiconductors, the approach would be a powerful tool for materials science, and it could be incredibly useful in finding new materials for energy applications. While perhaps not as awe-inspiring as colliding black holes, it is still physics with a bang.

This research is published in Physical Review Letters.

Acknowledgment

K. B. is supported by Grant No. NSF-CHE-1464795.

References

1. J. Erhard, P. Bleiziffer, and A. Görling, “Power Series Approximation for the Correlation Kernel Leading to Kohn-Sham Methods Combining Accuracy, Computational Efficiency, and General Applicability,” Phys. Rev. Lett. 117, 143002 (2016).

2. W. Kohn, “Nobel Lecture: Electronic Structure of Matter—Wave Functions and Density Functionals,” Rev. Mod. Phys. 71, 1253 (1999).

3. A. Pribram-Jones, D. A. Gross, and K. Burke, “DFT: A Theory Full of Holes?,” Annu. Rev. Phys. Chem. 66, 283 (2015).

4. D. Duan, Y. Liu, F. Tian, D. Li, X. Huang, Z. Zhao, H. Yu, B. Liu, W. Tian, and T. Cui, “Pressure-Induced Metallization of Dense (H2S)2H2 With High-Tc Superconductivity,” Sci. Rep. 4, 6968 (2014).

5. A. P. Drozdov, M. I. Eremets, I. A. Troyan, V. Ksenofontov, and S. I. Shylin, “Conventional Superconductivity at 203 Kelvin at High Pressures in the Sulfur Hydride System,” Nature 525, 73 (2015).

6. J. Yang, W. Hu, D. Usvyat, D. Matthews, M. Schütz, and G. K.-L. Chan, “Ab Initio Determination of the Crystalline Benzene Lattice Energy to Sub-Kilojoule/Mole Accuracy,” Science 345, 640 (2014).

7. H. Eshuis, J. E. Bates, and F. Furche, “Electron Correlation Methods Based on the Random Phase Approximation,” Theor. Chem. Acc. 131, 1084 (2012).