A Random Approach to Quantum Simulation

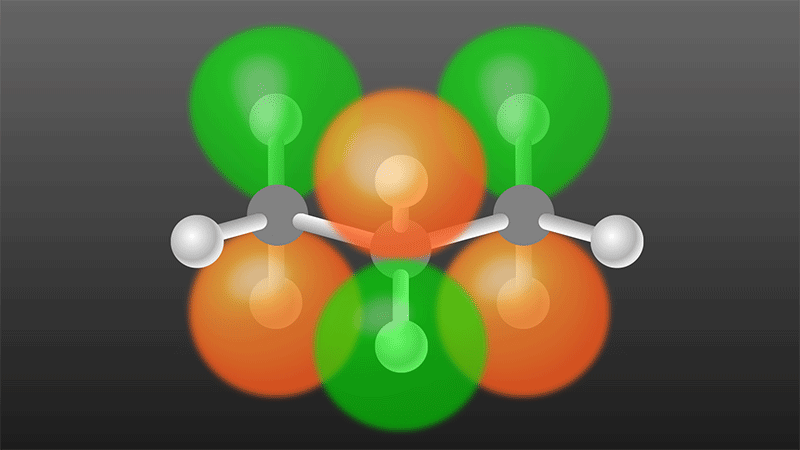

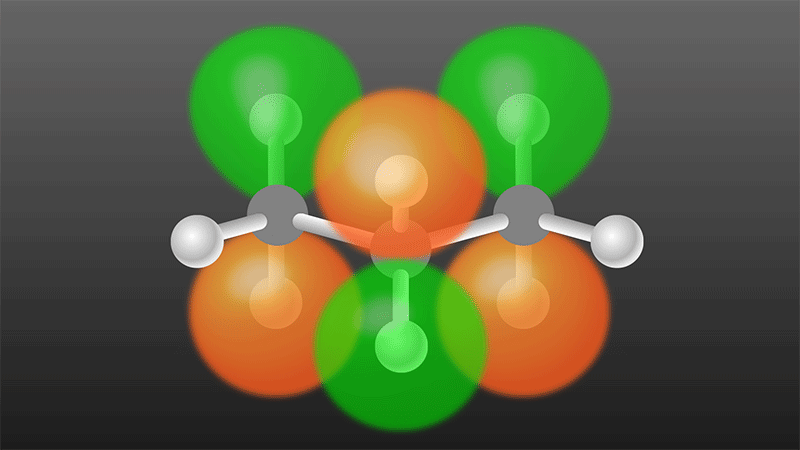

Quantum computers process information in radically different ways than regular computers. But how do you take advantage of that capability? You can’t just run a normal program on a quantum computer—you need a specialized algorithm to realize the quantum advantage. Perhaps the most promising application for such algorithms is the simulation of quantum systems like molecules or materials, though an ongoing challenge is to design algorithms that can be run in a practical amount of time. An approach proposed by Earl Campbell of the University of Sheffield in the UK could speed up the simulation of certain molecules [1]. His algorithm uses a random—as opposed to deterministic—sequence of operations. It may outperform other approaches when a molecule’s energy is determined by many small contributions, and Campbell considers propane, carbon-dioxide, and ethane as test cases.

The kind of simulation Campbell considers is one where you know the allowed orbitals on a molecule and want to figure out the way electrons occupy them. This occupancy involves superpositions of electron configurations that can vary with time. Mathematically, this time evolution is obtained from an exponential of the molecule’s Hamiltonian, which is given by a sum of terms, each corresponding to a different contribution to the molecule’s energy.

The first algorithm for simulating this kind of time evolution on a quantum computer was proposed by Seth Lloyd in 1996. His algorithm expressed the Hamiltonian as a sum of simple terms Hj and then approximated the time evolution as a product of exponentials of those terms [2]—a method known as the Lie-Trotter decomposition. Building on Lloyd’s approach, researchers later figured out how to estimate a molecule’s energy [3], which is needed to calculate chemical reaction rates. There was a hitch, though: If N orbitals are needed to describe a molecule, then the number of terms in the Hamiltonian will scale as N4. According to early estimates, the number of operations—or “gates”—that a quantum computer would need to simulate the molecule would then scale as N10 [4, 5]. Since most interesting systems—those that can’t already be simulated on classical computers—have an N of more than 100, the complexity of such a quantum simulation would be completely impractical.

One way to rein in the complexity is to use a more sophisticated sequence of exponentials. Quantum simulation algorithms like Lloyd’s split the total evolution time into many short time intervals. Normally these time intervals have to be very short to obtain an overall simulation that is sufficiently accurate, but the so-called Lie-Trotter-Suzuki decomposition provides similar accuracy with longer time intervals [6]. Compared with other approaches, this decomposition involves a smaller number of time intervals for a fixed total evolution time, which means fewer exponentials and therefore fewer gates. Moreover, the complexity of the simulation scales more favorably with the total time or level of accuracy [7]. But the Lie-Trotter-Suzuki method still has the problem of a large number of terms in the Hamiltonian. And even though some of these terms may be small enough to omit, dropping too many would reduce the accuracy of a simulation.

Campbell proposes a radically new approach: Instead of using all of the Hj terms in each time interval, randomly select just one at a time. In his recipe, larger terms are more likely to be chosen than smaller ones, so that even though most or all of the terms get used, the smaller ones are used less frequently. The resulting complexity scales with the sum of the sizes of the terms in the Hamiltonian, rather than the number of them—an enormous advantage for quantum chemistry problems involving millions of terms. The drawback is that by eschewing the full usage of terms in the earlier deterministic approaches, such as Lie-Trotter-Suzuki, the complexity scales somewhat poorly with the total time and error. Therefore, for evolutions simulated over a long time or requiring high precision, his method would have worse performance than the Lie-Trotter-Suzuki approach.

A seeming contradiction of Campbell’s method is that the error is significantly reduced if you effectively “forget” the sequence of terms used and represent the molecule’s state by averaging over the possible sequences. At first, this feature seems nonsensical: How can there be less error if you have less information? In fact, it turns out that the error estimate based on not knowing the sequence is the one that is realistic. The reason is that to obtain useful information from a quantum simulation, you don’t just evolve the system once. You need to run the simulation many times to sample the statistics of the system. In each simulation, a different random sequence would be used, so the errors from using particular sequences average out. A separate issue about error is how best to quantify it when estimating energies. That’s because the methods for estimating energies rely on an evolution over a long controlled time instead of samples from repeated evolutions over shorter times.

The numbers of gates in this work is still quite large, over a trillion for the molecules Campbell considered (propane, carbon dioxide, and ethane). But those numbers might drop if Campbell’s approach is combined with other methods, such as the Lie-Trotter-Suzuki decomposition. Another possibility is taking a cue from “quantum signal processing” (QSP) techniques [8]. QSP is analogous to Campbell’s approach in that it is aperiodic, but instead of being random, QSP uses a sequence chosen by a sophisticated algorithm. This method gives the best possible complexity scaling in time and error when applied to alternative models of simulation like linear combinations of unitaries [9] or quantum walks [10]. Perhaps Campbell’s approach could be derandomized and mimic QSP by harnessing a sophisticated algorithm to choose a complexity-minimizing sequence. Finding the right deterministic sequence would require a theoretical breakthrough, but it could conceivably yield an algorithm that combines the strengths of QSP and randomization.

This research is published in Physical Review Letters.

References

- E. Campbell, “Random compiler for fast Hamiltonian simulation,” Phys. Rev. Lett. 123, 070503 (2019).

- S. Lloyd, “Universal quantum simulators,” Science 273, 1073 (1996).

- A. Aspuru-Guzik, A. D. Dutoi, P. J. Love, and M. Head-Gordon, “Simulated quantum computation of molecular energies,” Science 309, 1704 (2005).

- J. D. Whitfield, J. Biamonte, and A. Aspuru-Guzik, “Simulation of electronic structure Hamiltonians using quantum computers,” Mol. Phys. 109, 735 (2011).

- D. Wecker, B. Bauer, B. K. Clark, M. B. Hastings, and M. Troyer, “Gate-count estimates for performing quantum chemistry on small quantum computers,” Phys. Rev. A 90, 022305 (2014).

- M. Suzuki, “General theory of fractal path integrals with applications to many-body theories and statistical physics,” J. Math. Phys. 32, 400 (1991).

- D. W. Berry, G. Ahokas, R. Cleve, and B. C. Sanders, “Efficient quantum algorithms for simulating sparse Hamiltonians,” Commun. Math. Phys. 270, 359 (2006).

- G. H. Low and I. L. Chuang, “Optimal Hamiltonian simulation by quantum signal processing,” Phys. Rev. Lett. 118, 010501 (2017).

- D. W. Berry, A. M. Childs, R. Cleve, R. Kothari, and R. D. Somma, “Exponential improvement in precision for simulating sparse Hamiltonians,” Forum Math. Sigma 5, e8 (2017).

- D. W. Berry and A. M. Childs, “Black-box Hamiltonian simulation and unitary implementation,” Quantum Inf. Comput. 12, 29 (2012).