The Importance of Investing in Physics

With large-scale COVID-19 vaccination programs now underway, some optimism about the new year seems justified. But even when the medical emergency phase of the pandemic is behind us, its economic consequences will linger for years to come. Funding for “pure” research is hard fought even in the boom years—can it still be justified in the straitened times ahead? In two essays, Cherry Murray of the University of Arizona and Nick Treanor of the University of Edinburgh, UK, give reasons to believe that it can. Using examples of technological and industrial impacts, Murray argues that investing in physics research makes economic sense. In a separate essay, Treanor explains that even without such tangible benefits, physics research can be justified because the insights that it provides into the world are especially deep. — Marric Stephens

Arguing with Numbers

COVID-19 has put the US into the steepest recession since WWII. Because of this recession, national governments seeking to stimulate the economy may not consider it important to maintain and enhance their long-term investment in scientific research, especially physics research. I argue that the return on such an investment is high and that robust funding for physics research should be maintained, as this research can drive the economy and create jobs.

Having spent many years in high-tech industry, I see three main reasons for maintaining and growing the funding for physics research during a major recession. The first is that scientific advances contribute to long-term economic growth via the technological innovations that they deliver. Prominent examples are those developments in electronics and optics that underlie our computer and telecommunications technologies. But economically important innovations have also been made in transportation, energy technologies, building technologies, food production, water and sanitation, healthcare, and, recently, the digital and biotech sectors.

The most obvious route to realizing the economic potential of innovations in these fields is via investment in “use-inspired” physics research—projects that have the direct goal of creating new technologies or advancing existing ones. Physics research underlies the design of new materials with desired properties (consider, for example, graphene and other two-dimensional materials). Such research has been crucial to achieving cost reductions in light-emitting diodes, photovoltaic cells, high-voltage semiconductors, and wind turbines, which are all needed for a transition to sustainable energy. In multidisciplinary research, physics expertise has contributed to new manufacturing and analysis techniques, leading to further advances in semiconductors, battery technology, sanitation, and health.

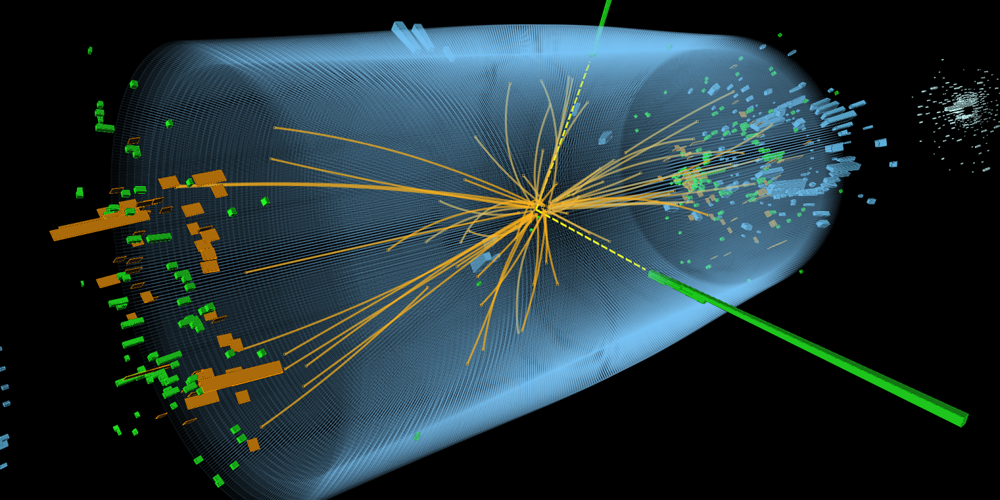

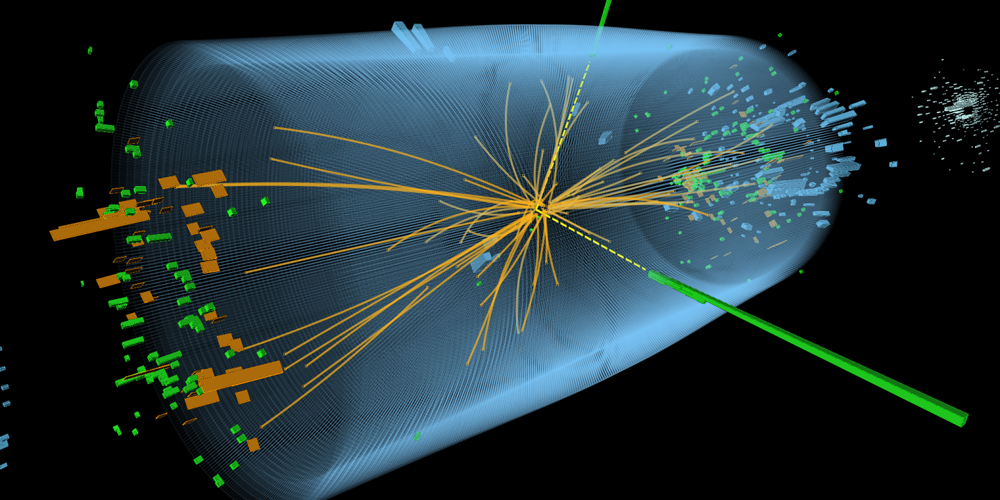

The second reason for keeping and growing funding also deals with innovative technologies—those that derive indirectly from fundamental physics research whose aim is to satisfy curiosity about the Universe. As a classic example, consider the need for the global sharing of data from high-energy-physics particle accelerators at CERN in the 1980s: the solution to this problem led directly to the World Wide Web and, several decades later, to the digital revolution.

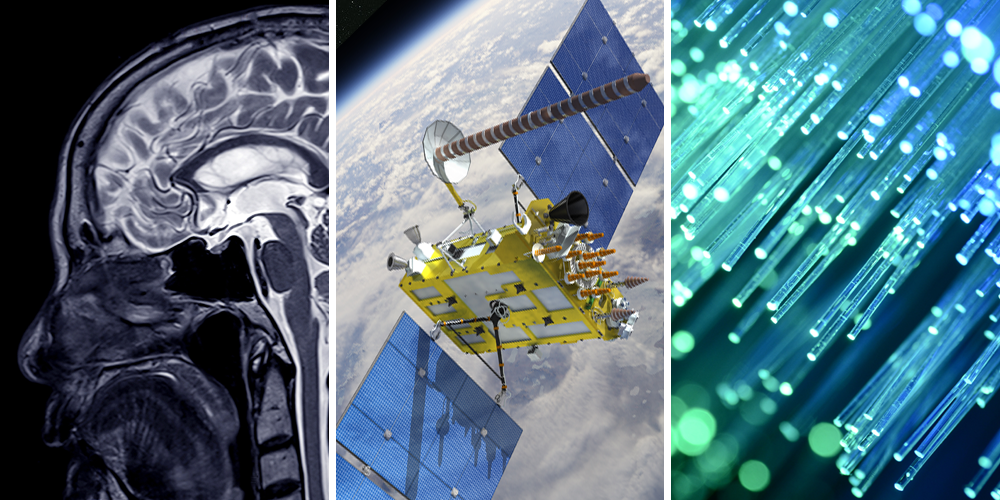

In another example that spans decades and disparate physics concepts, pioneering research into atomic clocks and ultraprecise spectroscopy in the 1950s, combined with special and general relativity, led to the Global Positioning System. First deployed in the 1970s for satellite tracking, GPS has in the half-century since become ubiquitous in navigation systems and smart devices on the ground.

Other applications stem from research into subwavelength imaging. Invented in the 1990s as a way of overcoming the fundamental resolution limit of conventional lenses, this technique now enhances nanotechnology, biology, and the miniaturization of components such as transistors and wireless elements that enable the Internet of Things. The medical realm has also been transformed by advances in pure physics: The discovery of nuclear magnetic resonance in 1938 led to the magnetic resonance imaging machines available in almost every clinic today, and accelerator technology invented in the 1950s is now routinely used for drug discovery and cancer therapy.

Breakthroughs made in the middle of the last century could also facilitate the next significant physics-driven biomedical application—optogenetics. This recent technology—rooted in developments in laser technology and in the discovery of green fluorescent proteins—allows for an improved understanding of brain function and will enable future treatments of neurological disorders.

My final argument for continued funding relates not to the innovations themselves but to the people needed to exploit them. Physics research programs generate the technical workforce demanded by the digital economy. Roughly 75% of new bachelor’s-degree graduates and 50% of new Ph.D.s in physics take jobs in industry. Yet these workers are in short supply, with high-tech companies struggling to find enough qualified people to fill vacancies. Government stimulus has a role here because fundamental-physics research funding encourages young people to study physics, and the number of physics Ph.D.s obtained in the US closely follows the levels of this funding. — Cherry Murray

The Fundamental Value of Knowledge

Imagine it is 1750, and you want to know Earth’s density. Reading a book or asking an expert won’t help, as the value is still undetermined. The only way to find out is to do the work—the measurements and the calculations—yourself. Would it be worth the effort? If so, why? Would the value lie in the knowledge that you would gain or would it be in the process of working out the answer?

In the 1770s, building on a suggestion by Newton, a team spent two years on the rain-swept flanks of Schiehallion, a mountain in the Scottish Highlands, undertaking exactly that task. They were working on the premise that, if they could measure the mass of the mountain and determine the deflection of a pendulum hanging near its lower slopes, they could infer the mean density of Earth. They chose Schiehallion because its shape and presumed uniform geological composition promised to make the work easier. But it was still backbreaking labor, with enormous physical, mental, and material demands.
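The premise can be sketched with a simple worked relation. This is an idealized model, not the team's actual calculation: it assumes the mountain acts like a point mass $M_m$ at a horizontal distance $d$ from the pendulum. The symbols $\theta$ (the deflection angle), $R_E$ and $M_E$ (Earth's radius and mass), and $V_m$ and $\rho_m$ (the mountain's volume and mean density) are introduced here purely for illustration.

```latex
% Ratio of the mountain's sideways pull to Earth's downward pull on the pendulum bob
\tan\theta = \frac{G M_m / d^2}{G M_E / R_E^2}
           = \frac{M_m}{M_E}\,\frac{R_E^2}{d^2}

% Substitute M_m = \rho_m V_m and M_E = \tfrac{4}{3}\pi R_E^3 \rho_E, then solve for \rho_E
\rho_E = \frac{3\,\rho_m V_m}{4\pi R_E\, d^2 \tan\theta}
```

In this idealization the gravitational constant cancels, so the mean density of Earth follows from the measured deflection, the geometry, and an estimate of the mountain's volume and density.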

In the end, they did manage to answer their question with a fair degree of accuracy. But as with most great research projects, their conclusion brought with it some unanticipated discoveries. To determine the deflection of the pendulum, they had to refine the use of the zenith sector, a telescope designed for precise measurements of astronomical latitude. To determine the mass of Schiehallion, they had to invent the concept of contour lines.

In hindsight, these spin-offs make the couple of years that the group invested in the project seem like time well spent. Knowledge is power, and when we know things, we can do things: things we care about and things that are practically valuable to us. If this were the only justification for the effort, however, then whether it was worth doing would have to be assessed by purely practical benefits weighed against opportunity cost. What practical benefit came (and will yet come) from these discoveries? What else could the scientists have done with their time? Could their resources have been channeled more effectively?

Science is expensive, and we have to decide where and how to direct time, energy, and money. For all we know, the puzzles that interest physicists could have less practical benefit than those of interest to other scientists. Assume for a moment that that’s the case. Is there any reason to think that physics deserves special support?

I think there is, and my reason for thinking that lies not with physics but with philosophy, my discipline. I am not so reckless as to try to tell the full story here, in a magazine for physicists, but I will give a sketch of the answer, drawing on my own research and that of my colleagues.

All fields of inquiry yield knowledge, and knowledge is a good thing, something it is right to value and to seek. But physics is unparalleled among the sciences in the quantity of knowledge it yields. That quantity is not greater because the realm of physics is larger than, say, that of biology, as the cosmos is larger than the biosphere. Rather, it is greater because how much knowledge a theory contains is determined by the “fundamentality” of the objects, properties, and relations the knowledge concerns.

Here is an analogy that might give a clearer picture of my view: If you learn an apple is a fruit, you learn more about it than if you learn it belongs to your friend Affan. Each is a fact about the apple, to be sure, but being a fruit is a fundamental property of the apple whereas belonging to your friend Affan isn’t.

All fields of inquiry contribute to bringing the many-splendored world into focus. But physics descries its most fundamental aspects. By doing so, it can tell us more about the world than anything else can. If knowledge is a good thing, and more of it is better, physics deserves special support independently of the practical benefit it may bring. — Nick Treanor